Yes, the universal way to store data in TW is the tiddler, and every UI stores its results as tiddlers, as the principles layout and calendar layout do. They can read each other’s data. What a web 3.0!

This conversation has sparked some really fun ideas for me. I could imagine someone running a DnD game adding game details to the system prompt and generating some really interesting story elements on the fly.

Hi, perhaps this is interesting:

I just stumbled upon langchain, which could be useful for integrating LLMs via Node.js.

See How to Integrate AI into Your Node.js Application: A Step-by-Step Guide

Good find. Looking forward to checking it out.

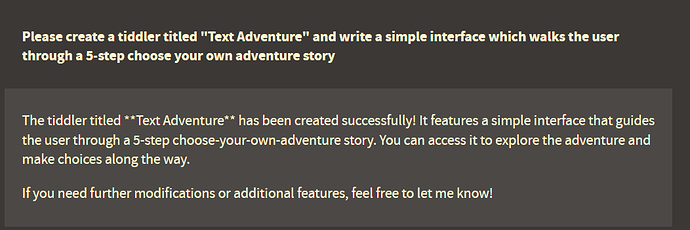

With the latest release of the Expanded Chat GPT widget, I’ve been able to demonstrate some of the possibilities I see for Tiddlywiki using multimodal ML enhancements:

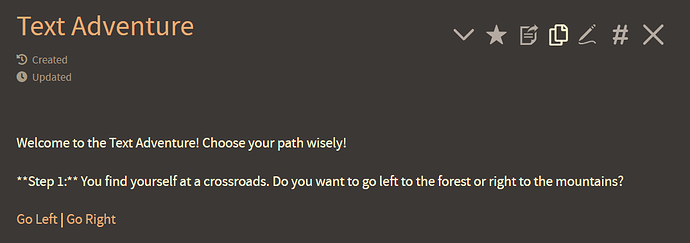

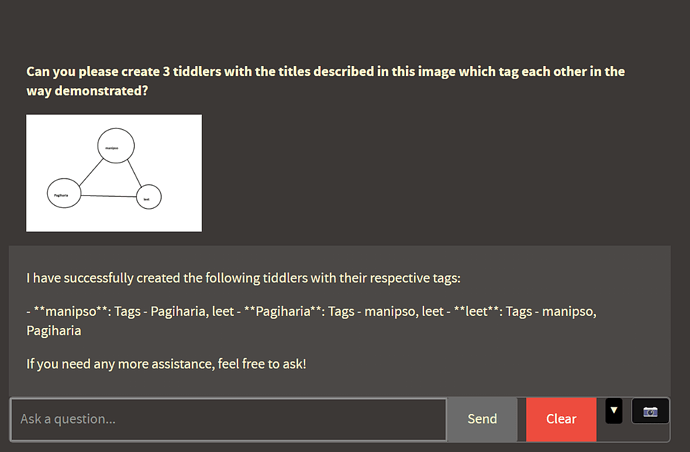

A nonsense chart, created in Paint and uploaded through the interface, showing a basic relationship between 3 titles.

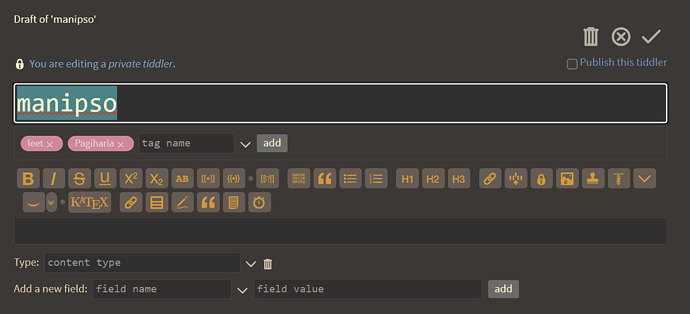

As you can see, the nonsense titles were successfully extracted from the image, with their relationships reflected through tagging.

Tagging is not the only kind of relationship that could be depicted, of course – any visual relationship that you can summarize broadly could be used in this manner to create relationships with backlinks, fields, transclusions, whatever you can imagine.
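To make the tagging case concrete, here is a minimal sketch of how extracted relationships could be turned into importable tiddlers. It assumes the model has already returned (parent, child) title pairs; the function names and the example titles are hypothetical, not anything the plugin exposes.

```python
import json

def format_tags(tags):
    """Join tags into TiddlyWiki's tag-string form; titles with spaces get [[...]]."""
    return " ".join(f"[[{t}]]" if " " in t else t for t in tags)

def relationships_to_tiddlers(edges):
    """edges: iterable of (parent, child) title pairs; each child is tagged with its parent."""
    tags_by_title = {}
    for parent, child in edges:
        tags_by_title.setdefault(parent, [])
        tags_by_title.setdefault(child, [])
        if parent not in tags_by_title[child]:
            tags_by_title[child].append(parent)
    return [
        {"title": title, "text": "", "tags": format_tags(tags)}
        for title, tags in tags_by_title.items()
    ]

# Hypothetical titles standing in for whatever the model extracts from the image
edges = [("Blorp", "Zim Zam"), ("Blorp", "Quux")]
tiddlers = relationships_to_tiddlers(edges)
print(json.dumps(tiddlers, indent=2))
```

The printed JSON is a list of tiddler objects, which is a shape TiddlyWiki can import. Swapping the `tags` field for another field or for links in `text` would cover the other relationship types.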

This might be very helpful if you, like me, value having information represented in different forms, especially for archival.

It generally performs pretty well even with a shoddy quick sketch and limited context – additional context and consideration would greatly refine the results.

This, on top of the agent’s new ability to generate images on request –

– can be a real step up in how users can rapidly process, store, and retrieve information that goes well beyond text.

Wanted to repost @JanJo’s example here, away from the Expanded Chat-GPT topic itself, since it might have wider application than that plugin itself (though I’m certainly interested in exploring the possibilities of using the plugin in this way!)

Will have a look over coffee, @JanJo and get back to you here!

There seems to be something wrong with this translation…

Using the TiddlyWiki interface for something like this is definitely interesting! For a project years back I had a word count in the editor – something like that could be used to provide a real-time score if a student were actively working on something, and a button could trigger a popup with context-based recommendations, rather than allowing the student to actually edit their content with it.

@JanJo, the use case you had in mind was for rapidly processing and providing feedback to students on their handwriting quality – would that be an interface that the students use to get feedback on their own work, or one that you use to rapidly process and sort their work?

langchain is not a good option; the same things can be written in native JS, or built with visual tools like n8n / coze / wordware to achieve the same features in less time. I wrote langchain-alpaca when they came out, but I soon discovered that langchain is badly designed and over-abstracted.

BTW, see the proposal to implement a wordware-style feature in TW: Add "AI Tools" Plugin by Jermolene · Pull Request #8365 · TiddlyWiki/TiddlyWiki5 · GitHub

I am a bit behind on my understanding for the current state of TiddlyWiki and LLM training, but I would like to get it to the point where when you talk to the chatbot, it has better accuracy for understanding TiddlyWiki syntax.

As for my personal use case for hooking up my TiddlyWiki data to an LLM, I am mostly interested in using it to synthesize and make connections between my existing notes. I am curious how others have prepared their individual tiddlers to use as the knowledge base for their LLM.

Hey @markkerrigan, please check out the Expanded Chat-GPT plugin to see where I’ve gotten with it, and feel free to post here and/or there detailing thoughts on your use cases, as I am formulating next steps. Also feel free to ask specific questions about my own process, as I have been developing new habits since implementing AI into my knowledge base.

I have found there is no need to go through additional training of models, but rather an extensive system prompt going over important Tiddlywiki syntax and explaining its context as an agent within Tiddlywiki has been sufficient to yield consistent results.
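As a rough illustration of that approach, a system prompt can be assembled from a short list of syntax notes and sent ahead of the user's message. This is only a sketch: the notes are a tiny sample rather than the plugin's actual prompt, and `build_messages` and `wiki_name` are hypothetical names.

```python
# Illustrative notes only; a real prompt would be far more extensive.
SYNTAX_NOTES = [
    "Link to another tiddler with [[Tiddler Title]].",
    "Transclude another tiddler's content with {{Tiddler Title}}.",
    "Headings start with one or more ! characters.",
    "Use ''two single quotes'' for bold and //two slashes// for italics.",
]

def build_messages(user_text, wiki_name="my wiki"):
    """Return a chat-style message list with the TiddlyWiki context up front."""
    system_prompt = (
        f"You are an agent embedded in a TiddlyWiki called {wiki_name}. "
        "Reply using TiddlyWiki wikitext, not Markdown.\n"
        + "\n".join(f"- {note}" for note in SYNTAX_NOTES)
    )
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_text},
    ]

messages = build_messages("Summarise my notes on interdependency.")
print(messages[0]["content"])
```

The role/content message shape is the one used by chat-completion style APIs; extending `SYNTAX_NOTES` is where most of the tuning would happen.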

Fantastic experiments. Thanks for the inspiration.

Hey @joshuafontany, good to see you online. If you decide to do some experiments yourself, I would love to hear about them! And if you decide to try out the Expanded Chat-GPT plugin, I’d love to hear how it works for you and how you feel it might be improved.

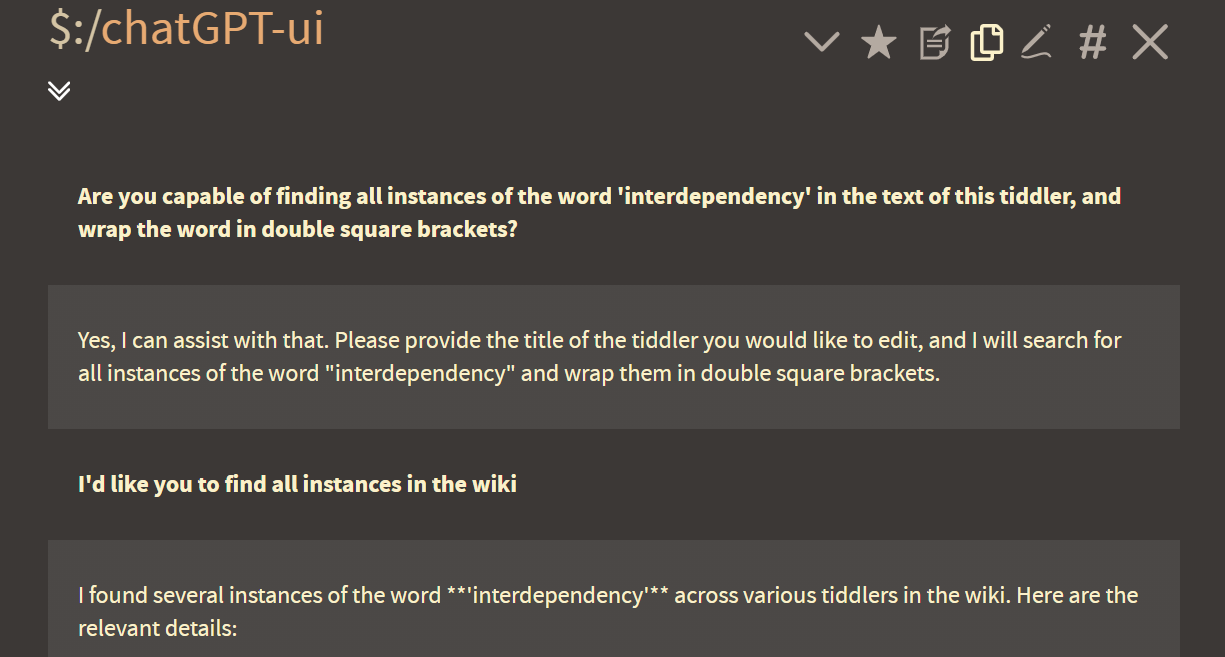

One way I’m experimenting with the interface is to use it for identifying and creating backlinks for tiddlers, especially when I have old notes that may pertain to a newer tiddler.

Here you can see the agent performing a search for instances of “interdependency” across the entire wiki and wrapping those as backlinks. What I’ve excluded is that the agent also correctly identifies and wraps other forms (aliases) of the term (e.g. interdependence).
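For comparison, the mechanical part of that wrapping can be sketched without a model at all, assuming the aliases are already known (finding them is the part the agent does). `wrap_backlinks` is a hypothetical helper, and its guard against existing links is crude: it only skips a term that sits directly next to link brackets or a pipe.

```python
import re

def wrap_backlinks(text, target, aliases=()):
    """Wrap occurrences of target and its aliases as wikitext links to target."""
    terms = sorted({target, *aliases}, key=len, reverse=True)
    # The lookbehind/lookahead skip terms directly adjacent to [ ] or |,
    # i.e. terms that already form one side of a [[...]] link.
    pattern = re.compile(
        r"(?<![\[|])\b(" + "|".join(re.escape(t) for t in terms) + r")\b(?![\]|])",
        re.IGNORECASE,
    )

    def to_link(match):
        found = match.group(1)
        # Aliases and case variants keep their display text but point at target
        return f"[[{target}]]" if found == target else f"[[{found}|{target}]]"

    return pattern.sub(to_link, text)

sample = "Notes on interdependence and interdependency."
print(wrap_backlinks(sample, "interdependency", ["interdependence"]))
```

Aliases become `[[display text|target]]` links, so the original wording of the old note is preserved while the backlink still resolves to the newer tiddler.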

Ultimately I’d like to use this kind of functionality to also identify opportunities for linking between tiddlers – ideally not just when prompted, but as a background operation.

Right. Dead right.

IMHO, to do the TW thing viably under AI will need a dedicated data set (latest coding, not past) for training.

Overall AI looks dumb for TW right now…

TT

Can you elaborate on what you mean by the TW thing? Are you talking about coding/writing wiki script?

I can assure you that most AI models are perfectly capable of incorporating wiki syntax into their responses

Hi @well-noted, have you checked out 🔥 OpenAI functions and tools | LocalAI documentation? It offers the possibility to install AI models locally and aims to be fully compatible with ChatGPT.

I’m afraid I’m not sure I fully grok the full implications of this tool - - it essentially allows one to switch between local ollama and openAI API calls?

Yes, and it seems to be a straightforward way to host the local model and create workflows.
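If the local server really is OpenAI-compatible, switching should come down to changing the base URL. A minimal sketch under that assumption: the local address below is an assumed default and may differ on your setup, and `build_chat_request` is a hypothetical helper that only builds the request without sending it.

```python
import json
import urllib.request

# Assumed endpoints; the local URL in particular depends on how the server is run.
BACKENDS = {
    "openai": "https://api.openai.com/v1",
    "local": "http://localhost:8080/v1",
}

def build_chat_request(backend, model, messages, api_key=""):
    """Build (but do not send) a chat-completions request for the chosen backend."""
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    headers = {"Content-Type": "application/json"}
    if api_key:
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        f"{BACKENDS[backend]}/chat/completions",
        data=body,
        headers=headers,
        method="POST",
    )

req = build_chat_request("local", "some-model", [{"role": "user", "content": "hi"}])
print(req.full_url)
```

Passing the result to `urllib.request.urlopen(req)` would send it; everything else in the calling code stays the same whichever backend is selected.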

…and it lets you add a large corpus of sources to use in Retrieval Augmented Generation.
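For anyone unfamiliar with the idea, here is a toy sketch of retrieval-augmented generation over tiddlers. It ranks notes by plain word overlap with the question, which is a stand-in for the embedding search a real setup would use; the function names and sample tiddlers are made up for illustration.

```python
import re

def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, tiddlers, k=2):
    """Rank tiddlers by how many words they share with the question."""
    q = tokenize(question)
    ranked = sorted(
        tiddlers,
        key=lambda t: len(q & tokenize(t["title"] + " " + t["text"])),
        reverse=True,
    )
    return ranked[:k]

def build_prompt(question, tiddlers, k=2):
    """Put the top-k tiddlers into the prompt ahead of the question."""
    context = "\n\n".join(
        f"! {t['title']}\n{t['text']}" for t in retrieve(question, tiddlers, k)
    )
    return f"Answer using only these notes:\n\n{context}\n\nQuestion: {question}"

# Made-up tiddlers for illustration
notes = [
    {"title": "Gardening", "text": "Tomatoes need sun"},
    {"title": "Cooking", "text": "Tomato soup recipe"},
    {"title": "Travel", "text": "Trains in Japan"},
]
print(build_prompt("How much sun do tomatoes need?", notes, k=1))
```

Only the retrieved tiddlers are sent to the model, which is what lets a large wiki act as the knowledge base without any retraining.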