No prerequisites in terms of non-core plugins, as far as I am aware: once you plug in the API key, it should work out of the box. If one is additionally using the Streams plugin, the two Streams macros that I mentioned should also work, though that would require the user to take the extra step of adding the macro to the context menu template and/or adding a keyboard shortcut to the Streams editor template.

Minor update:

$__plugins_NoteStreams_Expanded-Chat-GPT (0.0.83).json (75.3 KB)

Improved chaining of actions and made this capability more explicit to the agent.

Major release of the Expanded ChatGPT Plugin

$__plugins_NoteStreams_Expanded-Chat-GPT (0.0.9).json (92.9 KB)

If anyone has any problems with this release, I’ll be happy to address them. I can report it is working for me on 5.3.5 and the 5.3.6 pre-release.

Updates in this version:

- Timezone now automatically corresponds to user’s desktop

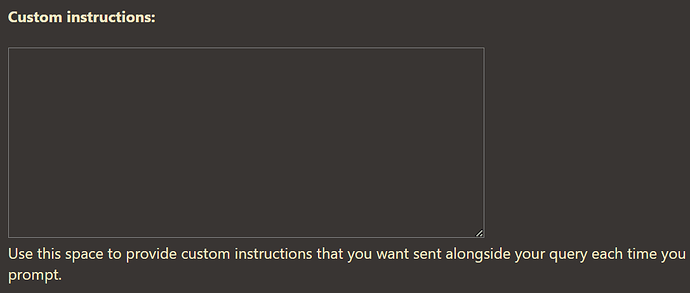

- $:/plugins/Notestreams/Expanded-Chat-GPT/User-instructions tiddler, included in config, allows user to input custom instructions to be sent along with each query

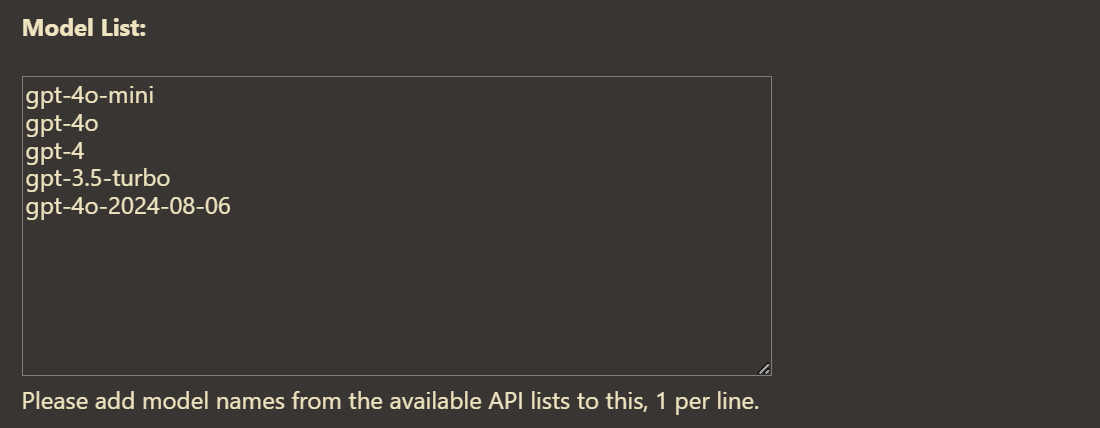

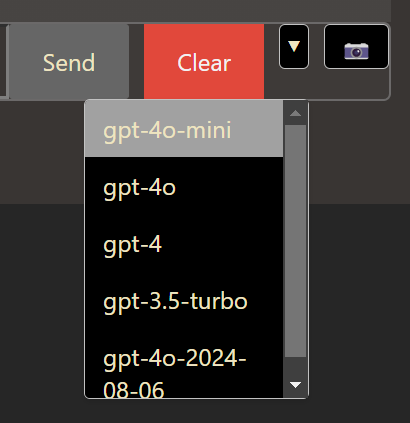

- Model switcher
  - Dropdown menu on the interface allows for switching between models
  - Added $:/plugins/Notestreams/Expanded-ChatGPT/model-list tiddler, included in config, which allows the user to add or remove any OpenAI models

- Multimodal

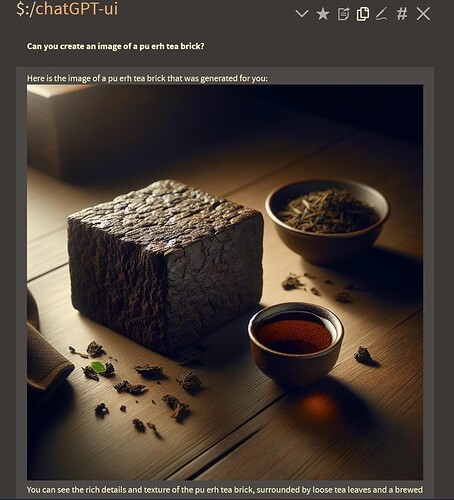

- Agent has been given ability to query dall-e-3 for image generation upon request

-

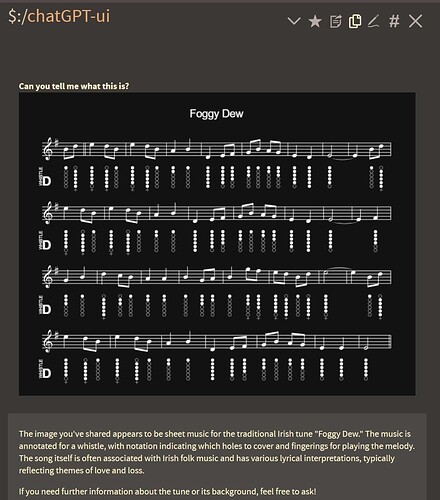

Vision Capabilities

a) Transclusions of image tiddlers are pushed for analysis

b) Upload button allows users to reference image files from system without importing

c) Add listener event for “Paste” which allows images to be uploaded by pasting into chat box

- Custom Instructions allow users to input their own instructions to be pushed with each query

This is kind of a big update, so let’s get into it:

As promised, a space has been provided in the config that allows the user to input custom instructions such as their personal shorthand, which will be sent immediately following the system prompt each time a query is sent.

See the conversation above about caching.

This should give the user far more control over their outputs, and, in that vein, a model selection dropdown has also been included in this version. This allows the user to select from any OpenAI language model that currently exists.

Models can now be added to or removed from this list in the config menu, allowing the user to choose with more detail and consideration.

These are the updates designed to give the user more control over their agent and the outputs they might expect. The default is still gpt-4o-mini, which I have found is excellent for most tasks – however, this will allow the user to switch to gpt-4o or any other model they feel might be better suited for a particular task, and easily switch back without interrupting a conversation.
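As a rough illustration of the two control features described above, a request might be assembled along these lines. This is a minimal Python sketch, not the plugin's actual JavaScript: the helper name `build_request`, the constant `DEFAULT_MODEL`, and the choice to send the custom instructions as a second `system` message are all assumptions. Only the ordering (system prompt first, custom instructions immediately after) and the `gpt-4o-mini` default come from the post.

```python
from typing import Dict, List, Optional

DEFAULT_MODEL = "gpt-4o-mini"  # default named in the post


def build_request(
    system_prompt: str,
    user_instructions: str,
    history: List[Dict[str, str]],
    query: str,
    selected_model: Optional[str] = None,
) -> Dict[str, object]:
    """Assemble a chat request body (hypothetical helper, not the plugin's code)."""
    messages: List[Dict[str, str]] = [{"role": "system", "content": system_prompt}]
    # Custom instructions follow the system prompt directly, if any were set.
    if user_instructions.strip():
        messages.append({"role": "system", "content": user_instructions.strip()})
    # Earlier turns are kept as-is, so the model can change mid-conversation.
    messages.extend(history)
    messages.append({"role": "user", "content": query})
    return {"model": selected_model or DEFAULT_MODEL, "messages": messages}
```

Because the conversation history is passed in unchanged, switching `selected_model` between two calls does not interrupt the conversation, which is the behaviour the dropdown is meant to give.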

Now for the fun updates:

As you can see, the agent itself has been given the ability to call for image generation and render those in the conversation history. Rather than the user prompting an image model directly (which I experimented with and decided against) this allows for a much more natural flow of conversation and allows the agent more flexibility.

Additionally, this update includes vision capabilities, which allow the model to recognize images either as transclusions or as uploads from the file system.

I don’t really have a way of representing this, but one can also upload files by copying an image and then pasting into the text box.

This can allow for some very useful abilities:

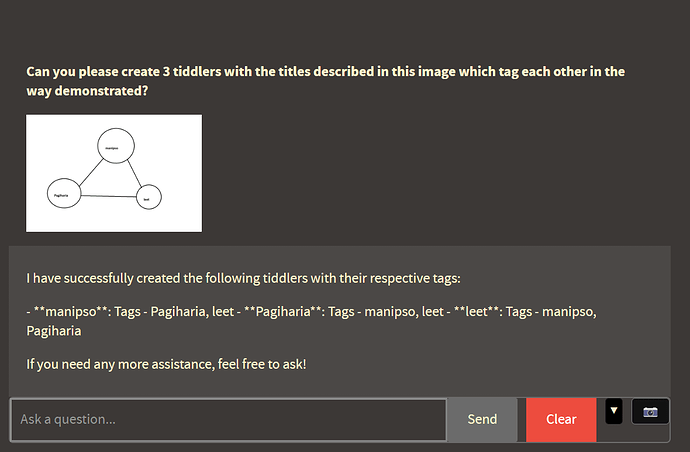

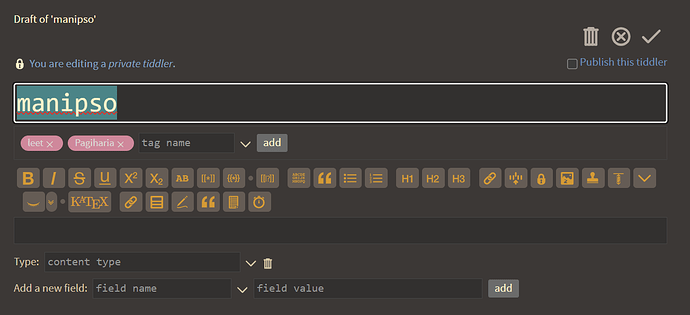

As one can see above, I doodled a nonsense chart in Paint, and the model was able to accurately depict the relationship described visually through the creation and tagging of original tiddlers.

Even with limited context, this ability performs quite well – additional context should result in more refined results.
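For anyone curious how an uploaded or pasted image might reach a vision-capable model, here is a minimal Python sketch. It assumes the image is sent inline as a base64 data URL inside a chat message, which is the shape OpenAI's Chat Completions API accepts for image input as I understand it. The helper name `image_message` is hypothetical, and the plugin's own JavaScript may do this differently.

```python
import base64
from typing import Dict, List


def image_message(prompt: str, image_bytes: bytes, mime_type: str = "image/png") -> Dict[str, object]:
    """Build a user message pairing text with an inline image (hypothetical helper)."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    data_url = f"data:{mime_type};base64,{encoded}"
    content: List[Dict[str, object]] = [
        {"type": "text", "text": prompt},
        {"type": "image_url", "image_url": {"url": data_url}},
    ]
    return {"role": "user", "content": content}
```

The same message shape would serve all three entry points (transclusion, upload button, paste), since each ends up with the raw bytes of an image and its MIME type.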

And here you can see these two skills being implemented in one query/response block.

This is pretty much as far as I’m planning to go with this phase of the project. As a final step I will be going through the plugin to do some cleanup of the code and maybe add a few fixes and new features for a version which I’ll release here, but I won’t necessarily be in a hurry to release it unless users report a bug or have a request I may have already fixed in the experimental version.

The next most obvious phase I foresee is the multimodal ability to work with audio. Since I think MWS is going to be a major step forward for me in terms of incorporating non-tiddler files into my wiki, I am quite comfortable waiting for the official release before focusing too much on this.

Cheers!

You may be surprised, but I’m impressed with Custom Instructions. Great idea.

(@CodaCoder thanks for reminding me to add it to the list)

And here I thought the best touch was pasting to upload

Mostly I just got tired of opening the JS in order to add new instructions

Minor Fix - 0.9.1:

$__plugins_NoteStreams_Expanded-Chat-GPT (0.9.1).json (95.3 KB)

I realized the transclusion pattern for images was interfering with text. This minor fix distinguishes images from text and, since I was in there, also pushes the stream-list for text tiddlers, if one exists.
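To make the distinction concrete, here is a minimal Python sketch of one way to sort transclusions into images and text. The regular expression, the `IMAGE_TYPES` set, and the function name `split_transclusions` are all assumptions for illustration and are not taken from the plugin. It only handles the plain `{{title}}` form and decides by the tiddler's `type` field.

```python
import re
from typing import Dict, List, Tuple

# Matches plain {{title}} transclusions only; templates ({{a||b}}) are ignored.
TRANSCLUSION = re.compile(r"\{\{([^{}|]+?)\}\}")
IMAGE_TYPES = {"image/png", "image/jpeg", "image/gif", "image/webp", "image/svg+xml"}


def split_transclusions(text: str, tiddler_types: Dict[str, str]) -> Tuple[List[str], List[str]]:
    """Return (image_titles, text_titles) for every {{title}} found in text."""
    images: List[str] = []
    texts: List[str] = []
    for match in TRANSCLUSION.finditer(text):
        title = match.group(1).strip()
        # Unknown or missing tiddlers fall through to the text branch.
        if tiddler_types.get(title, "") in IMAGE_TYPES:
            images.append(title)
        else:
            texts.append(title)
    return images, texts
```

Deciding by content type rather than by title avoids treating a text tiddler as an image just because its name happens to end in something like `.png`.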

I know I’m going against what I said only a few days ago, but I’m releasing a not-insignificant upgrade,

- because this plugin’s mention in the TiddlyWiki Newsletter is likely to bring it more traffic, and

- because I finally had the agent misunderstand and perform a task that was frustrating enough to undo that I felt it amounted to a “fix” that I could not ignore

$__plugins_NoteStreams_Expanded-Chat-GPT (0.9.3).json (116.9 KB)

The “fix” in question is technically a new feature, but probably deserved to be included earlier in the process: an undo button will now undo almost any action the agent can perform. Just a reminder that this includes creating and modifying tiddlers.

As you can see here, one of the “experimental” features packaged with this update is the ability for the agent to open and close tiddlers in the story river. Another, which is also covered by the undo button, is a function for renaming tiddlers.

Behind the scenes, there’s another improvement that I believe might qualify as a “fix”: the agent must now go through a process of verifying its actions before it reports that it has performed them. This eliminates a problem in which the model would either

- hallucinate that it had performed an action without even attempting to do so, or

- attempt to perform an action, following the methods it has been provided for doing so, and report that the action had been completed based on the assumption that calling the function was always sufficient for an action to be completed.

This latter point may be a bit confusing, as it’s not unreasonable to assume that functions would consistently perform if they are constructed well – to elaborate, I will use another improvement as an example:

There are occasions in which the agent might be responding to “partial hits”. Let’s take the case of “Please add the tag Yolo to all tiddlers with their Generation field value set to Millennial”.

- This type of complex task requires multiple steps, which the agent first breaks down into a list and then performs one at a time.

- It is very possible in a situation like this that only some of the appropriate changes are performed, even if the model has correctly identified all the changes that need to be made.

- In such a case, it would be reasonable for the agent to affirm that modification of the tiddlers has taken place, because it is accurately reporting partial successes.

A change to the modifyTiddler function has reinforced the agent’s ability to perform this kind of multi-step task. Additionally, the verification step makes sure that the agent confirms that the action has actually been performed, attempts it a maximum of 3 times if it has not, and then informs the user of the results, rather than arbitrarily reporting success.
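The verify-then-report loop described above can be sketched roughly as follows. This is a minimal Python illustration under stated assumptions, not the plugin's code: the function name `perform_with_verification`, the shape of the result dictionary, and the reading of “a maximum of 3 times” as three attempts in total are all mine.

```python
from typing import Callable, Dict


def perform_with_verification(
    action: Callable[[], None],
    verify: Callable[[], bool],
    max_attempts: int = 3,
) -> Dict[str, object]:
    """Run action, then check the result; retry until verified or attempts run out."""
    last_error = ""
    for attempt in range(1, max_attempts + 1):
        try:
            action()
            last_error = ""
        except Exception as exc:  # a failed call still counts as an attempt
            last_error = str(exc)
        # Success is only reported once the wiki state confirms it.
        if verify():
            return {"ok": True, "attempts": attempt, "error": ""}
    return {"ok": False, "attempts": max_attempts, "error": last_error}
```

For the “Yolo” example, `action` would add the tag to one tiddler and `verify` would re-read that tiddler and check that the tag is present, so a partial success on a batch shows up as a mix of `ok` and not-`ok` results instead of a blanket confirmation.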

This is a feature that still very much qualifies as experimental. Validation can become complex quickly, and balancing that against wait times, timeouts, and retries is all a bit complicated… While it’s stable and safe to use at this point, there’s still refinement I’ll be making to this process before it’s completely seamless.

While I have a few other “fixes” along these lines that I am working on, in addition to UI features and prompt refinements that I would like to make, I feel that it is timely and valuable enough to release this improved version for people to experiment with.

Without going too deeply into those fixes/features, it might be worth mentioning that I have begun some experimentation that would allow the agent to perform the undo operation itself; for the time being, however, it is incapable of that.

I think the button is a more valuable feature, frankly, but imagine a situation where the agent has performed an incredibly complex operation involving more than a dozen unique tasks in one query. The ability to tell an agent to undo “the last action,” could, effectively, undo dozens of actions at once, rather than requiring the user to sit and click the button dozens of times – a possibility I consider relevant.
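One way to picture the difference between clicking undo once per action and undoing a whole query at once is an undo log grouped by query. The Python sketch below is a hypothetical design for illustration only. The class `UndoLog` and its methods are assumptions, the wiki is modelled as a plain dictionary of title to text, and nothing here is taken from the plugin.

```python
from typing import Dict, List, Optional, Tuple

# (tiddler title, previous text, or None if the tiddler did not exist)
Snapshot = Tuple[str, Optional[str]]


class UndoLog:
    """Record previous tiddler states so changes can be rolled back."""

    def __init__(self, store: Dict[str, str]) -> None:
        self.store = store
        self.batches: List[List[Snapshot]] = []

    def begin_query(self) -> None:
        self.batches.append([])  # one batch per user query

    def set_text(self, title: str, text: str) -> None:
        if not self.batches:
            self.begin_query()
        self.batches[-1].append((title, self.store.get(title)))
        self.store[title] = text

    def _drop_empty_batches(self) -> None:
        while self.batches and not self.batches[-1]:
            self.batches.pop()

    def undo_last_action(self) -> bool:
        """Undo a single change, like one click of the button."""
        self._drop_empty_batches()
        if not self.batches:
            return False
        title, previous = self.batches[-1].pop()
        if previous is None:
            self.store.pop(title, None)  # the tiddler was created, so remove it
        else:
            self.store[title] = previous
        return True

    def undo_last_query(self) -> int:
        """Undo every change made while answering the most recent query."""
        self._drop_empty_batches()
        if not self.batches:
            return 0
        count = len(self.batches[-1])
        for _ in range(count):
            self.undo_last_action()
        return count
```

With this grouping, a query that touched a dozen tiddlers is reversed by a single `undo_last_query()` call, while the button can keep its one-step behaviour through `undo_last_action()`.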

Could this plugin use handwriting recognition if you have a ChatGPT account?

@JanJo, one will need to have an API key to use this at all, but other than the account associated with that, there is no need for a ChatGPT subscription or anything like that.

If one has that, then yes, it will perform handwriting recognition. You can see in Expanded ChatGPT Interface - #12 by well-noted that it has taken an image of my (pretty poor) handwriting and done a solid job of interpreting it and setting the text of the tiddler accordingly.

On a separate note, the validation and verification steps that I am trying to incorporate for more complex tasks are not quite there yet. I intend to do further work to refine them, but any suggestions from the group (either specific code or abstract ideas about how the operations would go) are greatly appreciated.

In the meantime, the plugin continues to perform very well and I’ve incorporated it into many of my daily tasks – one should just verify that tasks have been performed correctly, which is much easier since you can prompt the agent to open the tiddler for you to validate, and the undo button works very consistently.

Could the API-Key be hidden to make the function accessible without revealing the Key?

I’m not totally sure what you’re asking, @JanJo, so I will just give some context and you can ask for further clarity if I don’t address your question:

The API key that you get from platform.openai.com is a secret key that is shown to you only once, when you create it. You can create more keys from the platform, but if you lose a key you cannot go back and retrieve it.

If you have this plugin installed in your wiki there is a place to input the API key – that gets saved to the wiki as the text field of a tiddler.

The key is accessible only to those who have access to that tiddler. If you had a publicly available wiki, you would need to find some way to obfuscate and encrypt that tiddler… Although you would probably not want to have this plugin be available for people to use in a way that they could access your key, as each query sent by a user would charge your account (usually less than a penny for a simple query, but still).

It could pretty easily be modified for public use so that a user would have to input their own API key in order to make queries, which would not carry over through saves.

If you have some specific use case in mind, I’d be interested to help work through it with you.

I am a teacher and I would like to build an interface that gives pupils feedback on a handwritten input - so it would have to be my API-Key.

In the first step it should recognize the text and give hints on orthography.

In the second it should evaluate the content.

That’s an interesting use case (and the first time I’m coming across the word orthography, thank you for that!)

I believe that it would be capable of recognizing misspellings and making suggestions. I haven’t tested it on elementary handwriting, but I imagine it would do a fairly decent job, though further tests would need to be done.

As far as your API key being used, possible misuse could be minimized pretty easily: The OpenAI platform allows you to associate any API key with a specific project title and then set limits on how much can be charged on that project per month. I have not had to reload my credits since throwing in $20 at the beginning of the year, so I think it could be fairly affordable and if you set the limit at say $5, it would be safe to allow students to use the interface with your API key without running the risk of going destitute.

This would protect you even if you had the API key publicly available and were just acting on the basis of trust – if you ever had reason to suspect the API key had been compromised, the damage would be minimal and you could just swap it out.

Another simple process would be creating a new API key for each assignment, that would again have guardrails against severe misuse.

As far as hiding the API key, though, I’m afraid I don’t have too much experience with sharing my wikis with others, and from what I’ve seen around here, the ability to hide or encrypt a tiddler in a sufficient way to prevent malicious intent seems challenging… though perhaps will become less challenging with the new release? (no citation on that, it’s just the general sense I have)

There may be someone who knows more about encryption and hiding tiddler content, however, that could give you more info.

An easy way to make the API key less visible would be to hardcode it within the widget javascript – I don’t know the skill level of the average student, but it’s possible that the key would be sufficiently hidden from most people within the context of a very large codeblock.

But the API key would need to be accessible by the widget somehow… Would be very interested to hear if anyone has a method for extracting information from a source outside the wiki to use within it.

There is an encrypttiddler-plugin by danielo that does a decent job on one or a list of tiddlers.

What about entering a hashed key via a QR code?

Great! Then all you would need to do is to encrypt the single API key tiddler ($:/plugins/NoteStreams/expanded-chat-gpt/openai-api-key) and you would be good to go.

Although you should still set up the guardrails in the OpenAI platform, since a user could still query the agent far more than necessary (unless maybe you were to also modify the system message to forbid this kind of behavior… that would be interesting to attempt).

Would this be a key the student would input at time of use? If so, the key would have to be decryptable for the widget but not decryptable for the student.

My cryptography skills are extremely minimal.

I guess the student should have to authenticate.

I also would love to have an LDAP or OAuth plugin for TW