With the latest release of the Expanded ChatGPT Interface plugin (Expanded ChatGPT Interface - #11 by well-noted), I wanted to open a discussion about the possibilities for incorporating machine learning (ML; in short, AI) into TiddlyWiki. I'll give some context here for what that plugin can do, but for a full overview, or if you have thoughts, questions, or recommendations about the plugin specifically, please read and post in that topic.

That said, I haven't heard much about other community members' needs, interests, and activities with ML, so please share those here.

Several years ago, when I first came across ML, I became interested in using natural language processing (NLP) to turn all of my reading notes into entries in my wiki. With the recent spread of widely available GPT models, that vision has become reality, letting me spend more time reading and interacting with my notes and less time processing them:

Notes as they are exported from my Boox tablet

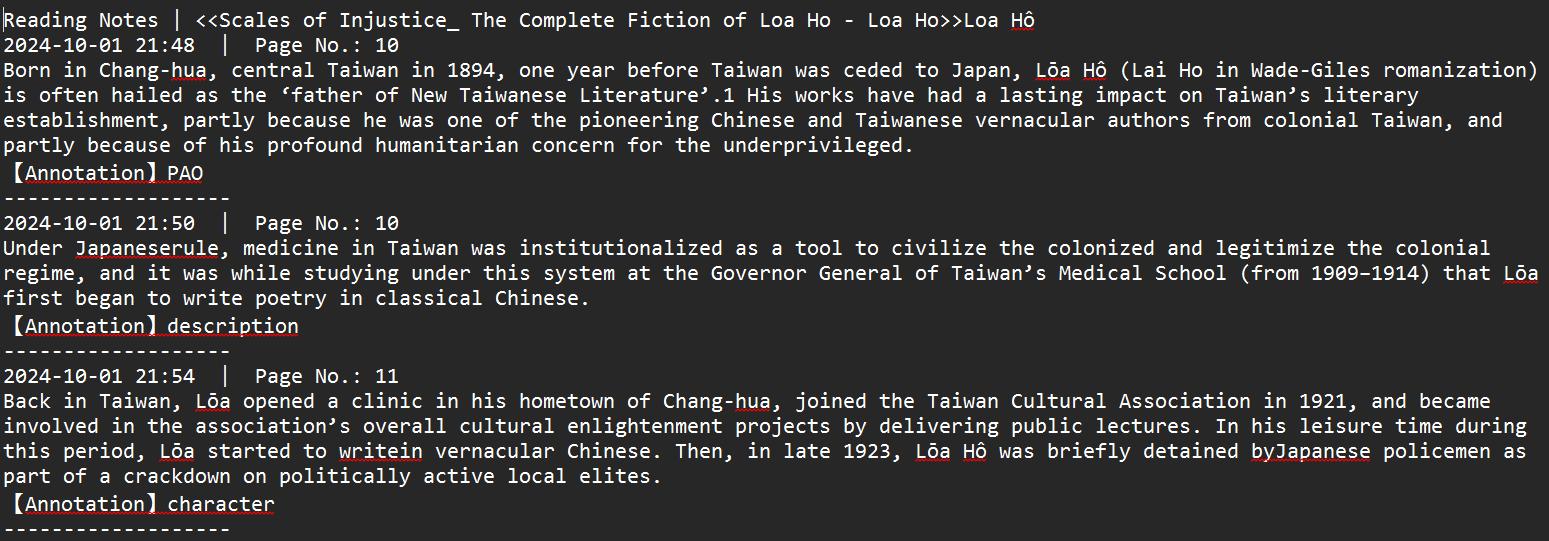

Notes after they are passed through a Python script that uses an OpenAI API call to convert them into my specific format for import into TiddlyWiki
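The script itself isn't shared here, so what follows is only a minimal sketch of how such a pipeline could look, not the actual code. It assumes (hypothetically) that the Boox export has been saved as plain text with notes separated by blank lines, that the formatting instructions fit in one system prompt (`SYSTEM_PROMPT` is a placeholder), and that the model name `gpt-4o-mini` is acceptable. The OpenAI call uses the official Python SDK (v1+); the output is a JSON array of tiddler objects, which TiddlyWiki can import by dragging the file onto the wiki.

```python
import json
from typing import Callable, Iterable

# Hypothetical prompt: the real formatting instructions are not given in the post.
SYSTEM_PROMPT = (
    "Rewrite the reading note you are given as a concise TiddlyWiki tiddler body. "
    "Reply with the body text only."
)


def split_notes(export_text: str) -> list[str]:
    """Split a raw export into notes, ASSUMING notes are separated by blank lines."""
    chunks = export_text.split("\n\n")
    return [chunk.strip() for chunk in chunks if chunk.strip()]


def openai_formatter(model: str = "gpt-4o-mini") -> Callable[[str], str]:
    """Return a note formatter backed by the OpenAI SDK (reads OPENAI_API_KEY)."""
    from openai import OpenAI  # imported lazily so the rest works without the SDK

    client = OpenAI()

    def format_note(note: str) -> str:
        response = client.chat.completions.create(
            model=model,  # assumed model name; swap in whichever you use
            messages=[
                {"role": "system", "content": SYSTEM_PROMPT},
                {"role": "user", "content": note},
            ],
        )
        return (response.choices[0].message.content or "").strip()

    return format_note


def tw_tag_list(tags: Iterable[str]) -> str:
    """Encode tags as a TiddlyWiki title list: space-separated, [[...]] around multi-word tags."""
    return " ".join(f"[[{tag}]]" if " " in tag else tag for tag in tags)


def notes_to_tiddlers(
    notes: Iterable[str],
    format_note: Callable[[str], str],
    source_title: str,
    tags: Iterable[str] = ("Reading Notes",),  # hypothetical default tag
) -> list[dict[str, str]]:
    """Format each note and wrap it as a tiddler object for JSON import."""
    tiddlers = []
    for index, note in enumerate(notes, start=1):
        tiddlers.append(
            {
                # Hypothetical naming scheme: "<source> - note <n>"
                "title": f"{source_title} - note {index}",
                "text": format_note(note),
                "tags": tw_tag_list([*tags, source_title]),
            }
        )
    return tiddlers


def write_import_file(tiddlers: list[dict[str, str]], path: str) -> None:
    """Write the tiddlers as a JSON array that TiddlyWiki's import can read."""
    with open(path, "w", encoding="utf-8") as handle:
        json.dump(tiddlers, handle, indent=2, ensure_ascii=False)
```

For example, `notes_to_tiddlers(split_notes(raw), openai_formatter(), "How to Read a Book")` would make one API call per note; passing a plain function such as `str.strip` instead lets you check the tiddler structure without calling the API at all. Keeping the formatter as a parameter is the main design choice: the prompt and model can change without touching the TiddlyWiki-specific part.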

More recently, as you can see in the post linked above, I was inspired to look into more direct implementations of ML within the TiddlyWiki environment.

An example of the text-editing capabilities of ML when incorporated into TW

An example of the text-completion capabilities of ML within the Streams plugin

To my eye, these are neat tricks: they might let someone use shorthand for rapid note entry and have it reformatted later, or speed up their drafting process.

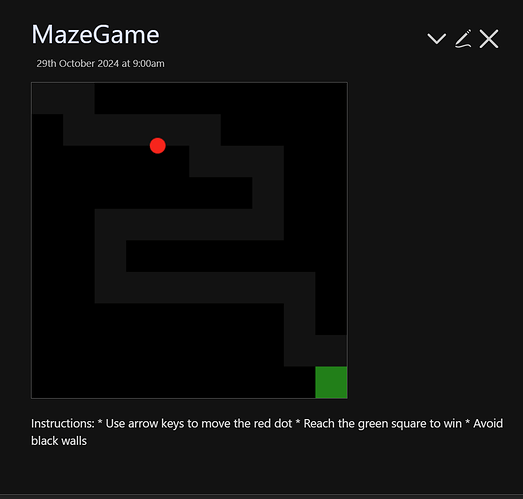

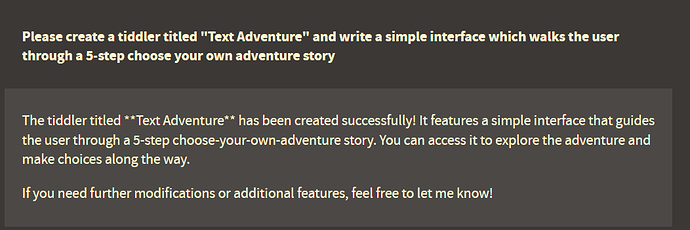

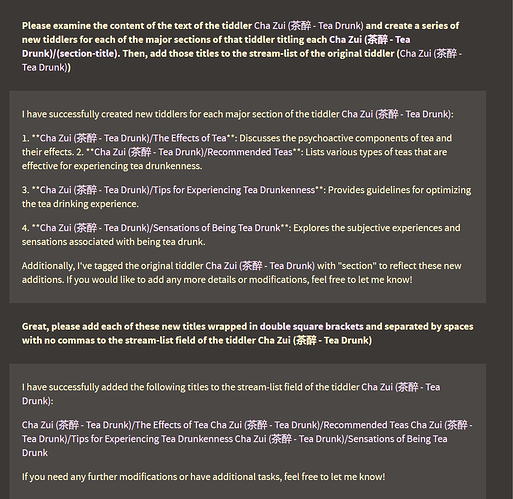

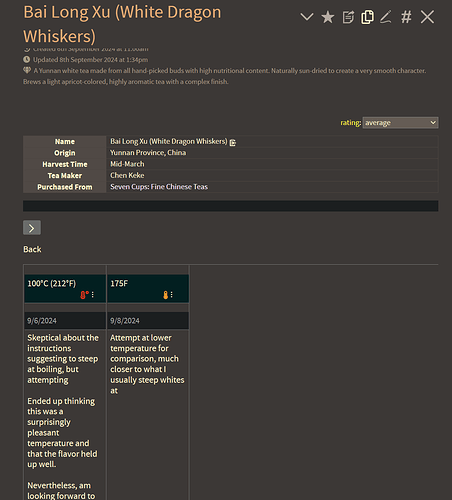

Moreover, ML can be incredibly useful in completing otherwise cumbersome tasks:

Demonstration of complex multi-stage contextual operations performed rapidly with natural language queries

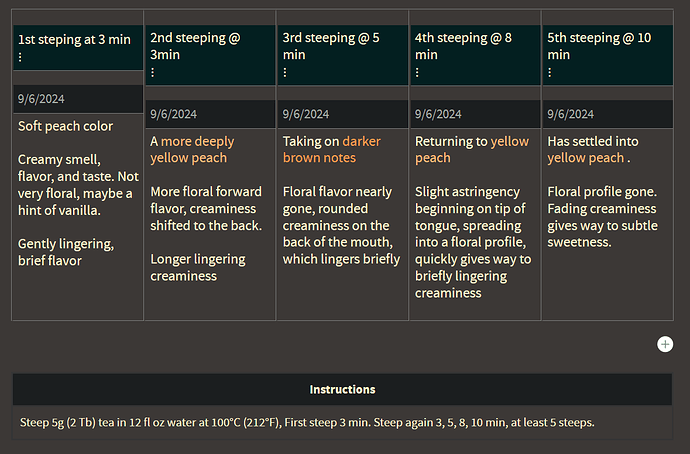

Demonstration of rapid transformation of raw data into a formatted table on a mobile interface

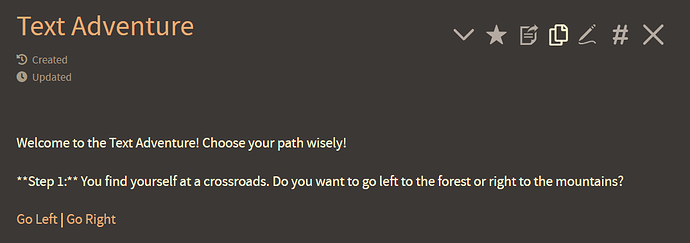

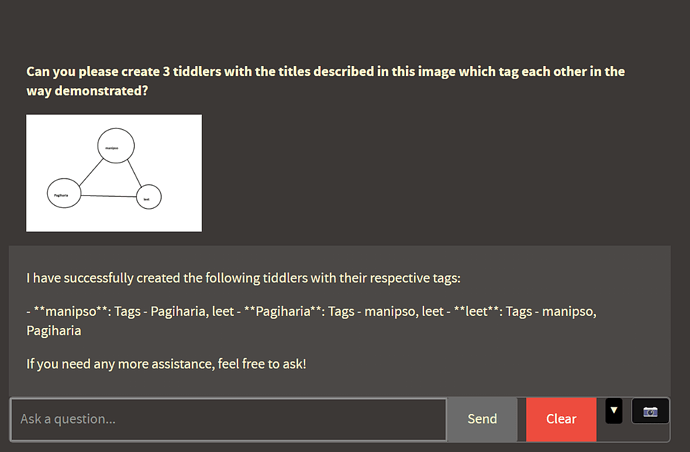

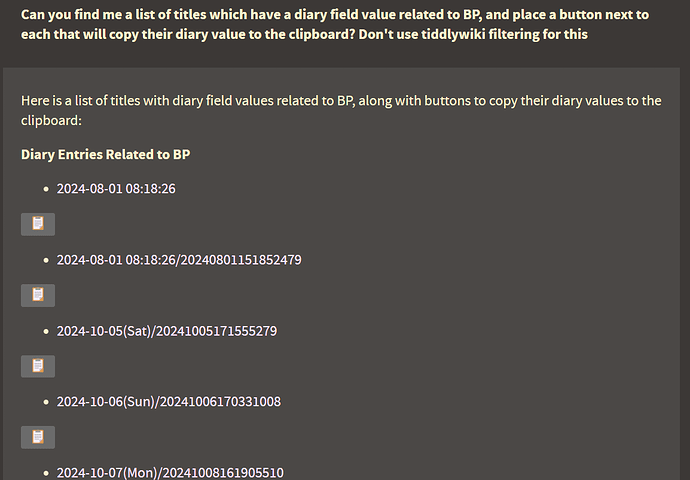

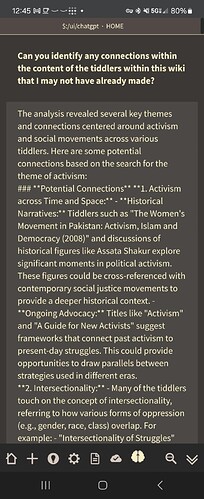

For me, the possibilities of incorporating ML into the TW environment really shine as an extension of what TW already does spectacularly well – categorizing and filtering bits of information to create relationships:

Demonstration of the ability to perform a complex search operation and provide the user with functional buttons that streamline their workflow

Creating original connections between tiddlers to better structure information

Additionally, I often find myself in the middle of one task (maybe reading an article while waiting in line) when I come across some information I'd like noted but don't have time to process completely:

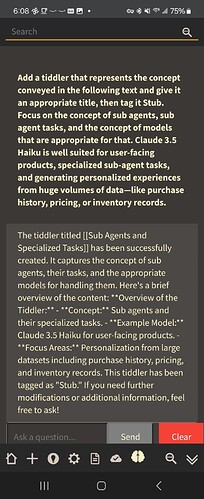

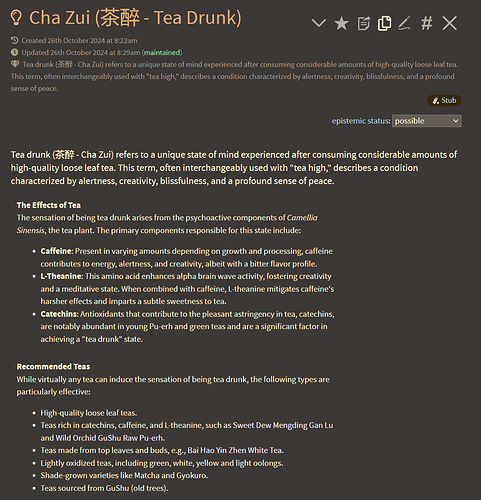

Having come across some information, creating spontaneous stubs by copying and pasting the context into the interface, to be revisited later

This is also helpful for capturing stray thoughts that I may not have time to fully articulate in the moment:

I want to hear more of the community's thoughts, experiences, and visions for combining these two tools.

I'm in agreement with you on that point; it is one of my top priorities. The current version of the Expanded ChatGPT Interface plugin is already capable of that: it can scan the content of all the tiddlers in your wiki, come up with a set of conclusions and instructions for its own reference about how you write, and use reference files to influence how it responds. I just need to do some strategic prompting and restructuring of the existing widgets to make that the default.

🤌