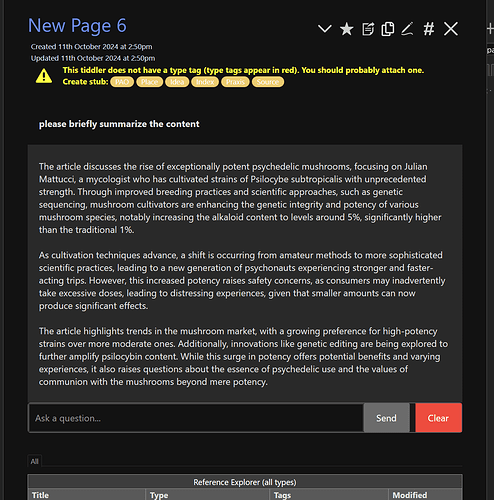

That version includes conversation memory, by the way, so you can carry on an ongoing conversation (though for now the memory only persists while the tiddler is open).
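
For anyone curious how memory like this can be passed to the API, here is a minimal sketch. It assumes history entries shaped like `{ user, assistant }`, which is how the widget code in this post stores each turn in its history tiddler. `buildMessages` and `maxTurns` are hypothetical names of mine, not part of the plugin, and this is not necessarily how the earlier version does it.

```javascript
// Sketch: turn saved history entries into the `messages` array for the chat API.
// `buildMessages` and `maxTurns` are hypothetical names, not part of the plugin.
function buildMessages(systemMessage, history, userMessage, maxTurns = 10) {
  const messages = [];
  if (systemMessage) {
    messages.push({ role: "system", content: systemMessage });
  }
  // Keep only the most recent turns so the request stays small.
  const recent = maxTurns > 0 ? history.slice(-maxTurns) : [];
  for (const entry of recent) {
    messages.push({ role: "user", content: entry.user });
    messages.push({ role: "assistant", content: entry.assistant });
  }
  // The new question always goes last.
  messages.push({ role: "user", content: userMessage });
  return messages;
}
```

One caveat: the widget saves the assistant reply as rendered HTML, so if you replay history this way you would probably want to store the raw reply text as well.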

Here is a version of the code that also lets you pass in a tiddler title, so that the tiddler's content is sent along with your prompt for review:

"use strict";

var import_widget = require("$:/core/modules/widgets/widget.js");

const CHAT_COMPLETION_URL = "https://api.openai.com/v1/chat/completions";

class ChatGPTWidget extends import_widget.widget {

constructor() {

super(...arguments);

this.containerNodeTag = "div";

this.containerNodeClass = "";

this.tmpHistoryTiddler = "$:/temp/Gk0Wk/ChatGPT/history-" + Date.now();

this.historyTiddler = this.tmpHistoryTiddler;

this.chatButtonText = $tw.wiki.getTiddlerText("$:/core/images/add-comment");

this.scroll = false;

this.readonly = false;

this.chatGPTOptions = {};

this.systemMessage = "";

}

initialise(parseTreeNode, options) {

super.initialise(parseTreeNode, options);

this.computeAttributes();

}

execute() {

this.containerNodeTag = this.getAttribute("component", "div");

this.containerNodeClass = this.getAttribute("className", "");

this.historyTiddler = this.getAttribute("history", "") || this.tmpHistoryTiddler;

this.scroll = this.getAttribute("scroll", "").toLowerCase() === "yes";

this.readonly = this.getAttribute("readonly", "").toLowerCase() === "yes";

this.tiddlerTitle = this.getAttribute("tiddlerTitle", ""); // New attribute

const temperature = Number(this.getAttribute("temperature"));

const top_p = Number(this.getAttribute("top_p"));

const max_tokens = parseInt(this.getAttribute("max_tokens"), 10);

const presence_penalty = Number(this.getAttribute("presence_penalty"));

const frequency_penalty = Number(this.getAttribute("frequency_penalty"));

this.chatGPTOptions = {

model: this.getAttribute("model", "gpt-4o-mini"),

temperature: temperature >= 0 && temperature <= 2 ? temperature : undefined,

top_p: top_p >= 0 && top_p <= 1 ? top_p : undefined,

max_tokens: Number.isSafeInteger(max_tokens) && max_tokens > 0 ? max_tokens : undefined,

presence_penalty: presence_penalty >= -2 && presence_penalty <= 2 ? presence_penalty : undefined,

frequency_penalty: frequency_penalty >= -2 && frequency_penalty <= 2 ? frequency_penalty : undefined,

user: this.getAttribute("user")

};

this.systemMessage = this.getAttribute("system_message", "");

}

render(parent, nextSibling) {

if (!$tw.browser) return;

this.parentDomNode = parent;

this.computeAttributes();

this.execute();

const container = $tw.utils.domMaker(this.containerNodeTag, {

class: "gk0wk-chatgpt-container " + this.containerNodeClass

});

try {

const conversationsContainer = $tw.utils.domMaker("div", {

class: this.scroll ? "conversations-scroll" : "conversations"

});

container.appendChild(conversationsContainer);

if (!this.readonly) {

const chatBox = this.createChatBox(conversationsContainer);

container.appendChild(chatBox);

}

this.renderHistory(conversationsContainer);

} catch (error) {

console.error(error);

container.textContent = String(error);

}

if (this.domNodes.length === 0) {

parent.insertBefore(container, nextSibling);

this.domNodes.push(container);

} else {

this.refreshSelf();

}

}

createChatBox(conversationsContainer) {

const chatBox = $tw.utils.domMaker("div", { class: "chat-box" });

const input = $tw.utils.domMaker("input", {

class: "chat-input",

attributes: {

type: "text",

placeholder: "Ask a question..."

}

});

const button = $tw.utils.domMaker("button", {

class: "chat-button",

text: "Send",

style: {

height: "50px",

minWidth: "80px",

fontSize: "16px",

padding: "0 15px"

}

});

chatBox.appendChild(input);

chatBox.appendChild(button);

const clearButton = $tw.utils.domMaker("button", {

class: "chat-button clear-history",

text: "Clear",

style: {

height: "50px",

minWidth: "80px",

fontSize: "16px",

marginLeft: "10px",

padding: "0 15px",

backgroundColor: "#f44336",

color: "white",

border: "none",

cursor: "pointer"

}

});

clearButton.onclick = () => {

this.clearChatHistory(conversationsContainer);

};

chatBox.appendChild(clearButton);

let isProcessing = false;

const sendMessage = async () => {

if (isProcessing) return;

const apiKey = $tw.wiki.getTiddlerText("$:/plugins/Gk0Wk/chat-gpt/openai-api-key", "").trim();

if (!apiKey) {

alert("Please set your OpenAI API key in the plugin settings.");

return;

}

let prompt = input.value.trim();

if (!prompt) return;

let fullMessage = prompt;

// Fetch the tiddler content if a tiddler title is provided

if (this.tiddlerTitle) {

const tiddlerContent = $tw.wiki.getTiddlerText(this.tiddlerTitle, "");

if (tiddlerContent) {

fullMessage = `${prompt}\n\n${tiddlerContent}`;

}

}

input.value = "";

isProcessing = true;

button.disabled = true;

const conversation = this.createConversationElement(prompt);

conversationsContainer.appendChild(conversation);

try {

await this.fetchChatGPTResponse(apiKey, fullMessage, conversation);

this.saveConversationHistory(prompt, conversation.querySelector(".chatgpt-conversation-assistant").innerHTML);

} catch (error) {

console.error(error);

this.showError(conversation, error.message);

} finally {

isProcessing = false;

button.disabled = false;

}

};

button.onclick = sendMessage;

input.addEventListener("keydown", (event) => {

if (event.key === "Enter" && !event.shiftKey) {

event.preventDefault();

sendMessage();

}

});

return chatBox;

}

async fetchChatGPTResponse(apiKey, message, conversationElement) {

const assistantMessageElement = conversationElement.querySelector(".chatgpt-conversation-assistant");

const messages = [];

if (this.systemMessage) {

messages.push({ role: "system", content: this.systemMessage });

}

messages.push({ role: "user", content: message });

const response = await fetch(CHAT_COMPLETION_URL, {

method: "POST",

headers: {

"Authorization": `Bearer ${apiKey}`,

"Content-Type": "application/json"

},

body: JSON.stringify({

...this.chatGPTOptions,

messages: messages,

stream: true

})

});

if (!response.ok) {

throw new Error(`HTTP error! status: ${response.status}`);

}

const reader = response.body.getReader();

const decoder = new TextDecoder("utf-8");

let buffer = "";

let fullResponse = "";

while (true) {

const { done, value } = await reader.read();

if (done) break;

buffer += decoder.decode(value, { stream: true });

const lines = buffer.split("\n");

buffer = lines.pop();

for (const line of lines) {

if (line.startsWith("data: ")) {

const data = line.slice(6);

if (data === "[DONE]") {

return;

}

try {

const parsed = JSON.parse(data);

const content = parsed.choices[0].delta.content || "";

fullResponse += content;

assistantMessageElement.innerHTML = this.renderMarkdownWithLineBreaks(fullResponse);

} catch (error) {

console.error("Error parsing SSE message:", error);

}

}

}

}

// Handle any remaining buffer (it may still carry the "data: " prefix)

const remaining = buffer.trim().replace(/^data:\s*/, "");

if (remaining && remaining !== "[DONE]") {

try {

const parsed = JSON.parse(remaining);

const content = parsed.choices[0].delta.content || "";

fullResponse += content;

assistantMessageElement.innerHTML = this.renderMarkdownWithLineBreaks(fullResponse);

} catch (error) {

console.error("Error parsing final buffer:", error);

}

}

// History is saved once by the caller (sendMessage); saving here as well would add a duplicate entry.

}

renderMarkdownWithLineBreaks(text) {

// Replace newlines with <br> tags, but preserve code blocks

const codeBlockRegex = /```[\s\S]*?```/g;

const codeBlocks = text.match(codeBlockRegex) || [];

let index = 0;

let result = text.replace(codeBlockRegex, () => `__CODE_BLOCK_${index++}__`);

// Replace newlines with <br> tags outside of code blocks

result = result.replace(/\n/g, '<br>');

// Restore code blocks

codeBlocks.forEach((block, i) => {

result = result.replace(`__CODE_BLOCK_${i}__`, block);

});

// Use TiddlyWiki's built-in markdown parser

return $tw.wiki.renderText("text/html", "text/x-markdown", result);

}

createConversationElement(message) {

const conversation = $tw.utils.domMaker("div", { class: "chatgpt-conversation" });

conversation.appendChild($tw.utils.domMaker("div", {

class: "chatgpt-conversation-message chatgpt-conversation-user",

children: [$tw.utils.domMaker("p", { text: message })]

}));

conversation.appendChild($tw.utils.domMaker("div", {

class: "chatgpt-conversation-message chatgpt-conversation-assistant"

}));

return conversation;

}

showError(conversationElement, errorMessage) {

const errorElement = $tw.utils.domMaker("div", {

class: "chatgpt-conversation-error",

children: [

$tw.utils.domMaker("p", { text: "Error: " + errorMessage })

]

});

conversationElement.querySelector(".chatgpt-conversation-assistant").appendChild(errorElement);

}

saveConversationHistory(userMessage, assistantMessage) {

let history = [];

try {

history = JSON.parse($tw.wiki.getTiddlerText(this.historyTiddler) || "[]");

} catch (error) {

console.error("Error parsing conversation history:", error);

}

history.push({

id: Date.now().toString(),

created: Date.now(),

user: userMessage,

assistant: assistantMessage

});

$tw.wiki.addTiddler(new $tw.Tiddler({

title: this.historyTiddler,

text: JSON.stringify(history)

}));

// Re-render the conversation

const conversationsContainer = this.domNodes[0].querySelector(".conversations, .conversations-scroll");

conversationsContainer.innerHTML = '';

this.renderHistory(conversationsContainer);

}

renderHistory(container) {

let history = [];

try {

history = JSON.parse($tw.wiki.getTiddlerText(this.historyTiddler) || "[]");

} catch (error) {

console.error("Error parsing conversation history:", error);

}

for (const entry of history) {

const conversation = this.createConversationElement(entry.user);

conversation.querySelector(".chatgpt-conversation-assistant").innerHTML = entry.assistant;

container.appendChild(conversation);

}

}

clearChatHistory(conversationsContainer) {

$tw.wiki.deleteTiddler(this.historyTiddler);

conversationsContainer.innerHTML = '';

}

refresh(changedTiddlers) {

const changedAttributes = this.computeAttributes();

if (Object.keys(changedAttributes).length > 0 || this.historyTiddler in changedTiddlers) {

this.refreshSelf();

return true;

}

return false;

}

}

exports["chat-gpt"] = ChatGPTWidget;
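
The streaming loop in `fetchChatGPTResponse` buffers the response text, splits it into lines, and parses each `data: ` line as JSON. The same steps can be sketched as two small standalone helpers, which makes the logic easier to test outside TiddlyWiki. `extractSSEData` and `deltaText` are hypothetical names of mine, not part of the plugin.

```javascript
// Sketch: split buffered stream text into complete "data: ..." payloads.
// `extractSSEData` is a hypothetical helper, not part of the plugin.
function extractSSEData(buffer) {
  const lines = buffer.split("\n");
  const rest = lines.pop(); // the last piece may be an incomplete line
  const payloads = [];
  let done = false;
  for (const rawLine of lines) {
    const line = rawLine.trim();
    if (!line.startsWith("data:")) continue; // skip blank lines and comments
    const data = line.slice(5).trim();
    if (data === "[DONE]") {
      done = true;
      break;
    }
    payloads.push(data);
  }
  return { payloads, rest, done };
}

// Pull the text delta out of one payload, tolerating chunks without content.
function deltaText(payload) {
  const parsed = JSON.parse(payload);
  const choice = parsed.choices && parsed.choices[0];
  return (choice && choice.delta && choice.delta.content) || "";
}
```

In the widget, `rest` would be carried over as the new `buffer` for the next `reader.read()` call, and `done` would end the loop.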

Call it with `<$chat-gpt tiddlerTitle="test-operation" />`


Please note that in both of these versions I have selected gpt-4o-mini as the model, since it is the most affordable option (and quite capable, in my experience).

I believe a dropdown menu for selecting the model would be an excellent future feature. For the time being you can change it manually, either by editing the default in the code or by setting the widget's `model` attribute.
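
As a rough idea of what the dropdown could build on, here is a sketch that produces the option entries for a model list. The list you pass in is up to you; only `gpt-4o-mini` comes from this post, and `buildModelOptions` is a hypothetical helper, not something the plugin provides.

```javascript
// Sketch: describe the <option> entries for a model dropdown.
// Only "gpt-4o-mini" comes from this post; any other model names are the caller's choice.
const DEFAULT_MODEL = "gpt-4o-mini";

function buildModelOptions(models, current) {
  // Fall back to the default if the current value is not in the list.
  const selected = models.includes(current) ? current : DEFAULT_MODEL;
  // Always offer the default, and drop duplicates.
  const unique = Array.from(new Set([DEFAULT_MODEL, ...models]));
  return unique.map((name) => ({
    value: name,
    text: name,
    selected: name === selected
  }));
}
```

Each entry could then be turned into an `<option>` element inside a `<select>`, with the chosen value passed through as `model` in `this.chatGPTOptions`.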

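If you want to reference a tiddler that includes transclusions, for example, make a small adjustment to `sendMessage` so that it renders the tiddler with `$tw.wiki.renderTiddler` instead of reading its raw text with `$tw.wiki.getTiddlerText`: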

const sendMessage = async () => {

if (isProcessing) return;

const apiKey = $tw.wiki.getTiddlerText("$:/plugins/Gk0Wk/chat-gpt/openai-api-key", "").trim();

if (!apiKey) {

alert("Please set your OpenAI API key in the plugin settings.");

return;

}

let prompt = input.value.trim();

if (!prompt) return;

let fullMessage = prompt;

// Fetch and wikify the tiddler content if a tiddler title is provided

if (this.tiddlerTitle) {

const tiddlerContent = $tw.wiki.renderTiddler("text/plain", this.tiddlerTitle);

if (tiddlerContent) {

fullMessage = `${prompt}\n\n${tiddlerContent}`;

}

}

input.value = "";

isProcessing = true;

button.disabled = true;

const conversation = this.createConversationElement(prompt);

conversationsContainer.appendChild(conversation);

try {

await this.fetchChatGPTResponse(apiKey, fullMessage, conversation);

this.saveConversationHistory(prompt, conversation.querySelector(".chatgpt-conversation-assistant").innerHTML);

} catch (error) {

console.error(error);

this.showError(conversation, error.message);

} finally {

isProcessing = false;

button.disabled = false;

}

};
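
If you want the rendered text when it is available but the raw text as a fallback, the combining step could be pulled out into a helper along these lines. This is only a sketch: `buildFullMessage`, `renderPlain` and `getRaw` are hypothetical names. In the widget, the two callbacks would wrap `$tw.wiki.renderTiddler("text/plain", title)` and `$tw.wiki.getTiddlerText(title, "")`.

```javascript
// Sketch: combine the prompt with a tiddler's text, preferring rendered output.
// `renderPlain` and `getRaw` are hypothetical callbacks supplied by the caller.
function buildFullMessage(prompt, title, renderPlain, getRaw) {
  if (!title) return prompt; // no tiddler requested, send the prompt alone
  let content = "";
  try {
    content = renderPlain(title) || "";
  } catch (error) {
    content = ""; // rendering failed, fall back to raw text below
  }
  if (!content.trim()) {
    content = getRaw(title) || "";
  }
  // Same layout as the widget: prompt, blank line, tiddler content.
  return content.trim() ? `${prompt}\n\n${content}` : prompt;
}
```

Inside `sendMessage` this would replace the `if (this.tiddlerTitle) { ... }` block with a single call that assigns `fullMessage`.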