Announcing the release of the improved version of the Expanded ChatGPT Plugin

$__plugins_NoteStreams_Expanded-Chat-GPT (.8).json (73.2 KB)

Updates in this version:

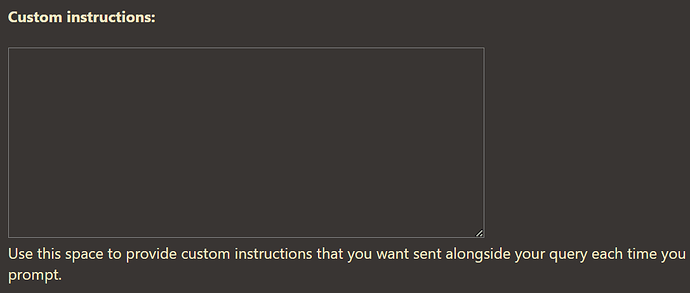

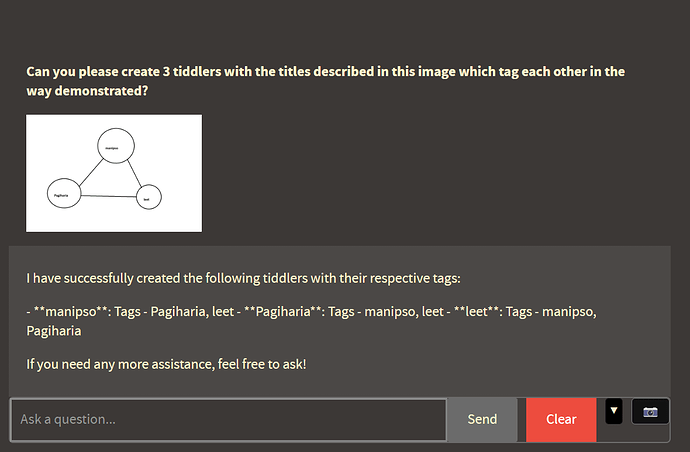

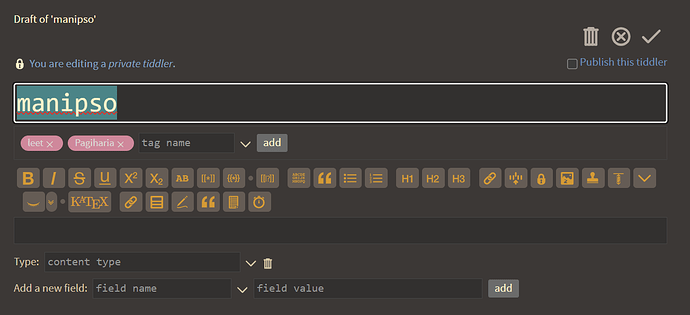

- Wikified text: Chat responses in this version are wikified, so they can include internal links and other TiddlyWiki features.

- Increased Context: The agent has been given more context about what it means to be a TiddlyWiki agent, along with explicit guidance on how to perform TiddlyWiki operations such as filtering, tables, and lists.

- Additional Macro (Clarify Text): The <<clarify-text>> macro takes the content of the storyTiddler and makes an editing pass over it.

- Additional Macro (Text Completion): The <<yadda-yadda>> macro takes the content of the storyTiddler and appends text that logically completes the sentence.

In addition to these macros, there are Streams-specific versions, <<clarify-text-streams>> and <<yadda-yadda-streams>>, which perform roughly the same operations within a Streams edit box. They can be added as an actionMacro to the Streams context menu or set up as a keyboard trigger (as demonstrated below).

Will be opening up a discussion about these and future ways of incorporating ML into TiddlyWiki, but here I want to briefly demonstrate some of these abilities:

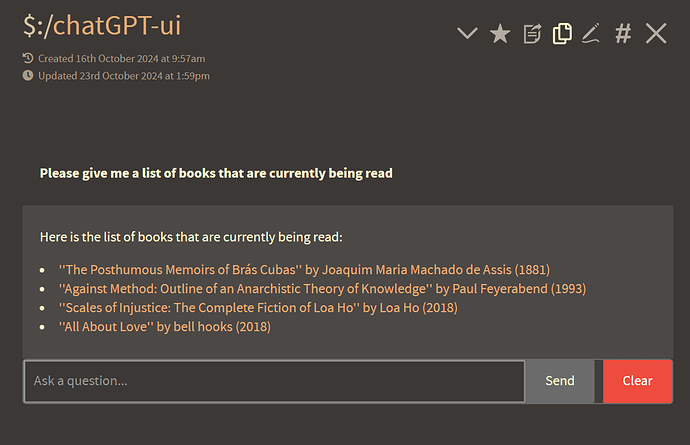

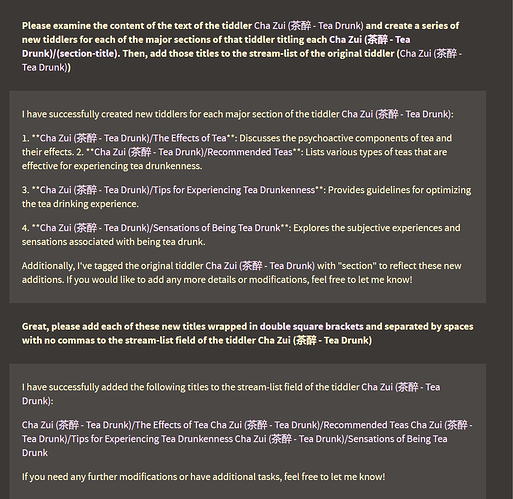

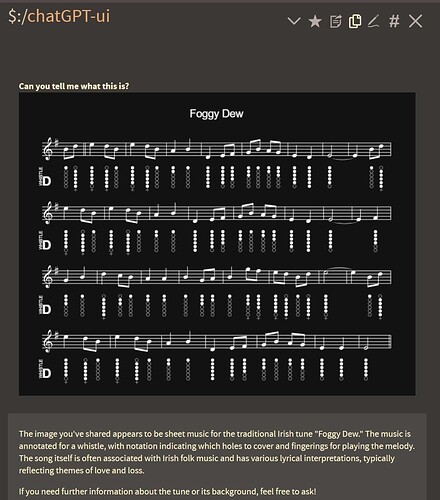

Here you can see a demonstration of the agent performing a filtered list operation and returning that list as links.

There is also a demonstration of how the model can split large amounts of content into multiple tiddlers with contextual naming schemes.
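For comparison, a filtered list rendered as links can also be written by hand in core TiddlyWiki wikitext. The sketch below shows the core `list-links` macro and an equivalent `$list` widget; the tag `Meeting Notes` is a hypothetical placeholder, and this is not necessarily the exact wikitext the agent produces.

```wikitext
<!-- Hypothetical tag "Meeting Notes": newest 10 tiddlers, rendered as a bulleted list of links -->
<<list-links filter:"[tag[Meeting Notes]!sort[modified]limit[10]]">>

<!-- Equivalent long form: $link with no content displays the target tiddler's title -->
<ul>
<$list filter="[tag[Meeting Notes]!sort[modified]limit[10]]">
<li><$link/></li>
</$list>
</ul>
```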

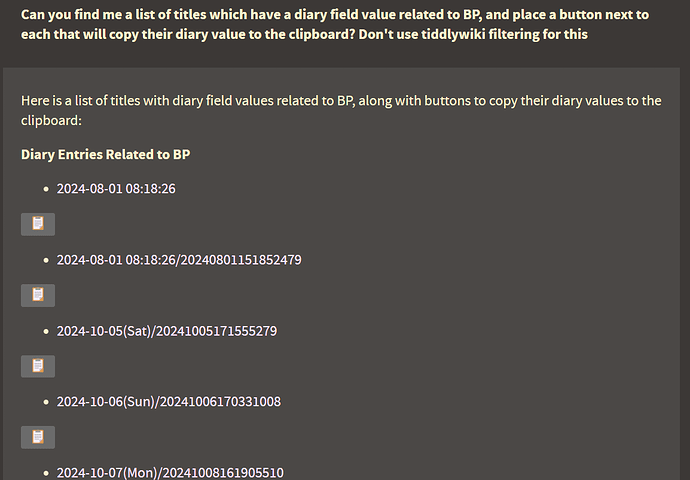

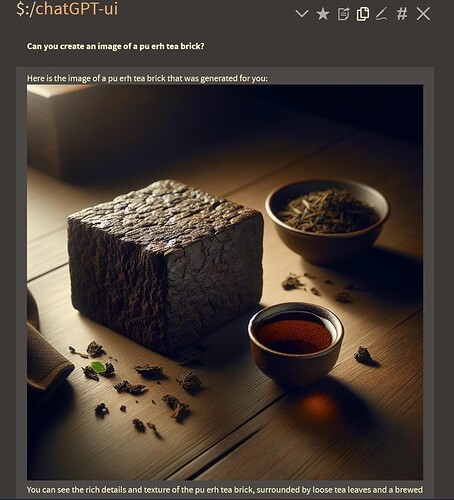

Here you can see the agent combine a contextual search with the creation of functional buttons in its answer.
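As a rough illustration of what "search plus functional buttons" looks like in plain wikitext, here is a sketch using the core `$list` and `$button` widgets. The search term `streams` is a hypothetical example, and the agent's actual output may be structured differently.

```wikitext
<!-- Search non-system tiddlers for a hypothetical term, then render one navigation button per result -->
<$list filter="[!is[system]search[streams]limit[5]]">
<$button to=<<currentTiddler>>>
Open <$text text=<<currentTiddler>>/>
</$button>
</$list>
```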

The agent can also produce logically formatted tables from raw data. Although it is not shown here, you can prompt the agent to provide the wikitext used to generate a table, then copy and paste that wikitext to use the table anywhere.
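For reference, TiddlyWiki table wikitext uses pipe-delimited cells, with a leading `!` marking a header cell. The rows below are placeholder data for illustration only, not output from the agent.

```wikitext
|!Task |!Owner |!Status |
|Task A |Alice |Done |
|Task B |Bob |Open |
```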

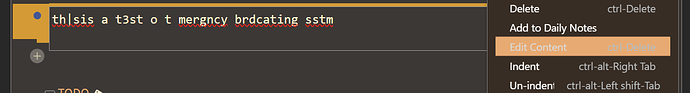

A basic implementation of the Streams plugin, using the context menu

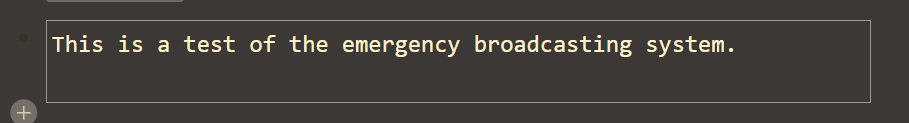

A keyboard-activated implementation of the streams-specific plugin <<yadda-yadda-streams>>

A demonstration of the <<yadda-yadda>> macro when implemented as (packaged) button from the edit box

A demonstration of the clarify text function implemented as a (also packaged) button from the edit box

Will post more soon about ideas for future development, and about some of the ways these new features could be incorporated into one's workflow.

All earlier terms and conditions apply.

- It appears stable across the multiple tests I've run, and so far it has not made any major overwrites (though it certainly has the capability!), so please always back up.

- Works (for me) on the current version (5.3.5) and on the 5.3.6 prerelease running MWS.

- A brief word of warning about the new macros: if you call them directly in a tiddler, or do not properly encapsulate the action within its trigger, the macro will fire immediately and repeatedly. Because the tiddler is constantly updating, you will be unable to delete it from the dropdown menu and will need to delete it through some other operation.
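One way to keep a macro inside its trigger is to pass it to a button's `actions` attribute as a quoted string, so the macro call is only parsed and run when the button is clicked rather than whenever the tiddler renders. This is a minimal sketch using the core `$button` widget, assuming `<<clarify-text>>` and `<<yadda-yadda>>` behave like ordinary action macros; the plugin's own packaged buttons may be wired differently.

```wikitext
<!-- The quoted actions string is stored as text and only executed on click -->
<$button actions="<<clarify-text>>">
Clarify text
</$button>

<!-- Same pattern for the text-completion macro -->
<$button actions="<<yadda-yadda>>">
Complete text
</$button>
```

By contrast, placing a bare `<<clarify-text>>` in the body of a tiddler is exactly the direct call the warning describes.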

And here I thought the best touch was pasting to upload
