Hi, with a very large TW knowledge base two things continually occupy my mind.

- How to make sure some tiddlers don’t rot at the bottom of the pond, by having a method of signaling that a review is required at intervals of N days. This resulted in my review plugin, which I have been using for a while now.

- Recognising that the value of a tiddler (an item of knowledge, in my case) is not always accurately indicated by a short review interval. I may, for instance, remember the content of a very valuable tiddler reliably and so not need to review it periodically.

- Always being mindful of what I call value inflation: it’s easy to get excited about a new or recently reviewed item and assign it a high value, then the next exciting thing comes along and is given an even higher value, and before you know it there is too much clustering around the ‘high score’ values. An analogy is that sports and dance judges need to be careful awarding tens, because they have nowhere else to go when someone even better than the best so far comes along. On this note, my review tiddlers plugin now has an anti-inflation device: there are options to increase or decrease the review interval for all tiddlers by one day. Typically I increase by one day (in a range of 1 to 100 days), so this works in cycles against the inevitable inflation, which tends towards shorter review intervals. It is in effect a counter-inflation device.

So anyway, I have started (well, almost completed) work on a new ratings plugin. I wanted fine granularity, so the rating is in the range [1 to 100], where 100 represents the most valuable tiddlers.

Here are some screenshots; they all show the rating plugin working alongside the existing review tiddlers plugin.

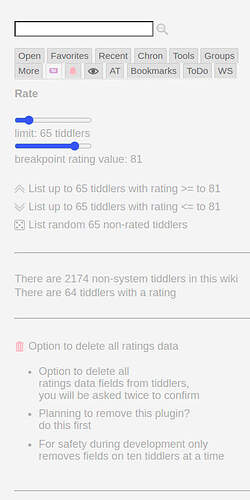

The first slider controls how many rated tiddlers will appear on the story river; call this value ‘N’.

The second slider controls what I am calling the breakpoint rating value; call this value ‘B’.

The upwards-pointing double chevron fills the story river with up to N tiddlers with a rating value greater than or equal to B. They are ordered down the story river by decreasing rating value, BUT if more than N tiddlers satisfy this criterion then the list is truncated at the high-value end. Put simply, if you ask for tiddlers with a rating value of 85 or more and some actually have the value 85, then these will appear at the end of the story river and the highest-value tiddlers will be omitted if necessary.

The downwards-pointing double chevron shows tiddlers down the story river, with the first ones having the rating closest to, but not more than, B.

The overall pattern is therefore to think of all rated tiddlers ordered by rating value with the highest at the top, select a breakpoint value and then go up or down from there N tiddlers.
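As a rough illustration of the two chevrons (a Python sketch only, not the plugin’s actual code), the selection could look like the following. The function names `select_up` and `select_down` and the dict-of-ratings representation are my own assumptions for the example; how ties between equal ratings are ordered is also an assumption.

```python
from typing import Dict, List


def select_up(ratings: Dict[str, int], n: int, b: int) -> List[str]:
    """Upwards chevron: up to n titles rated >= b, shown highest first.

    If more than n titles qualify, the ones closest to b are kept,
    so the overflow is cut from the high-value end.
    """
    eligible = [title for title, rating in ratings.items() if rating >= b]
    # Keep the n titles nearest the breakpoint (lowest qualifying ratings).
    nearest = sorted(eligible, key=lambda title: ratings[title])[:n]
    # Display order: decreasing rating down the story river.
    return sorted(nearest, key=lambda title: ratings[title], reverse=True)


def select_down(ratings: Dict[str, int], n: int, b: int) -> List[str]:
    """Downwards chevron: up to n titles rated <= b, closest to b first."""
    eligible = [title for title, rating in ratings.items() if rating <= b]
    return sorted(eligible, key=lambda title: ratings[title], reverse=True)[:n]


# Hypothetical example data: title -> rating.
ratings = {"alpha": 100, "beta": 95, "gamma": 90,
           "delta": 85, "epsilon": 80, "zeta": 70}

print(select_up(ratings, n=3, b=85))    # ['beta', 'gamma', 'delta']
print(select_down(ratings, n=2, b=85))  # ['delta', 'epsilon']
```

Note that in the first call ‘alpha’ (rated 100) is the one left out, which is the truncation at the high-value end described above.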

It’s designed that way to avoid having two sliders to indicate a range. Also, my other review plugin has a similar slider for N: I wanted consistency, but I didn’t want three sliders in all.

The ‘display a randomly selected list of non-rated tiddlers’ button is simply a way for me to periodically examine which tiddlers should ideally join the ‘rating pool’.
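The random pick of non-rated tiddlers can be sketched in a few lines of Python. This is only an illustration under my own assumptions: the name `sample_unrated`, the parameter `k` for how many to show, and the idea that a tiddler counts as non-rated when it has no entry in the ratings dict.

```python
import random
from typing import Dict, Iterable, List, Optional


def sample_unrated(all_titles: Iterable[str],
                   ratings: Dict[str, int],
                   k: int,
                   rng: Optional[random.Random] = None) -> List[str]:
    """Return up to k randomly chosen titles that have no rating yet."""
    if rng is None:
        rng = random.Random()
    unrated = [title for title in all_titles if title not in ratings]
    # random.sample raises ValueError if k exceeds the population size,
    # so cap k at the number of unrated titles.
    return rng.sample(unrated, min(k, len(unrated)))


# Hypothetical example data.
titles = ["alpha", "beta", "gamma", "delta", "epsilon"]
ratings = {"alpha": 100, "delta": 85}

print(sample_unrated(titles, ratings, k=2))  # two of beta, gamma, epsilon
```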

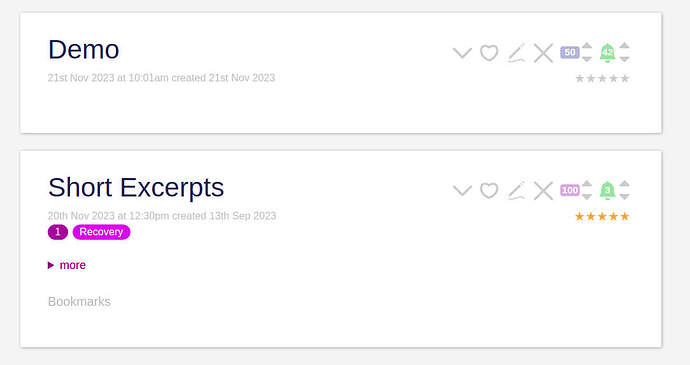

The above image shows a couple of tiddlers with the rating icon. The icon displays the rating value (in this case 100 and 50), and the image shows how the colour of the icon changes with the rating value. The inc and dec buttons are used to increase or decrease the rating value.

If the tiddler has no rating yet, the rating icon appears ghosted grey and without the inc-dec buttons. The tiddler becomes rated simply by clicking on the rating icon: it then gets a default value of 50 and the inc-dec buttons appear.
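The rated/unrated behaviour amounts to a tiny state machine, sketched here in Python purely as an illustration. Several details are assumptions on my part rather than a description of the plugin: `None` stands for ‘no rating yet’, the inc-dec step is taken to be 1, values are clamped at the ends of the range, and clicking the icon on an already rated tiddler is assumed to do nothing.

```python
from typing import Optional

DEFAULT_RATING = 50              # value given on the first click (from the post)
MIN_RATING, MAX_RATING = 1, 100  # rating range (from the post)


def click_icon(rating: Optional[int]) -> int:
    """Clicking the icon rates an unrated tiddler with the default value.

    Assumption: an already rated tiddler is left unchanged.
    """
    return DEFAULT_RATING if rating is None else rating


def step(rating: int, delta: int) -> int:
    """inc/dec button: move the rating by delta, clamped to the range."""
    return max(MIN_RATING, min(MAX_RATING, rating + delta))


def buttons_visible(rating: Optional[int]) -> bool:
    """The inc-dec buttons are shown only once the tiddler is rated."""
    return rating is not None


rating = None
print(buttons_visible(rating))  # False
rating = click_icon(rating)
print(rating)                   # 50
print(step(rating, +1))         # 51
print(step(100, +1))            # 100 (clamped at the top of the range)
```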

The green bell icon is part of the review plugin and has similar controls.

If anyone else has a need to manage a large number of tiddlers, in a knowledge base or otherwise, then I can post the plugin here. The first version is working; I am just refining the doc and so on.

I will probably need to add the usual anti-inflationary measure, in this case functionality to increase or decrease the rating of all rated tiddlers by one but staying in the range [1 to 100] of course.
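A minimal Python sketch of that measure, assuming ratings are held as a title-to-value mapping and that ‘staying in the range’ means clamping at 1 and 100 (the function name `shift_all` is hypothetical, and this is not the plugin’s code):

```python
from typing import Dict

MIN_RATING, MAX_RATING = 1, 100  # rating range (from the post)


def shift_all(ratings: Dict[str, int], delta: int) -> Dict[str, int]:
    """Return a new mapping with every rating moved by delta, clamped to range."""
    return {title: max(MIN_RATING, min(MAX_RATING, rating + delta))
            for title, rating in ratings.items()}


# Hypothetical example data.
ratings = {"alpha": 100, "beta": 50, "gamma": 1}

print(shift_all(ratings, -1))  # {'alpha': 99, 'beta': 49, 'gamma': 1}
print(shift_all(ratings, +1))  # {'alpha': 100, 'beta': 51, 'gamma': 2}
```

One consequence of clamping is that tiddlers already at a limit stay there, so repeated shifts in one direction gradually bunch values up at that end of the range.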

I have only been using the new ratings plugin for a few days, but already I can see it works for me slightly differently to the review plugin. They are different aspects of the same fundamental need to somehow rank over 2000 tiddlers, some of book-chapter length (OCR scans). It is somehow more meaningful in some situations to fill the story river with, say, 100 tiddlers (all but the shortest have a ‘reveal more’ mechanism to minimise screen space) and then to say “Hmmm, that one appears above this one, do I really think this is the correct importance ranking?” and perform the relevant promotion-demotion swap. Somehow that doesn’t work as well with review intervals, for the reasons already stated.