If you haven’t yet seen it in this forum, you should know that I’m a big sceptic of the current crop of “AI”.

When it comes to writing code, I’m already seeing the results from our recent hires at GigantiCorp… and from some more experienced developers who really should know better.

We’re getting a lot of code that works for 75 to 90% of the scenarios it has to cover, and falls down miserably for the rest. Much of this is created by generative AI tools. The problem arises when the AI user doesn’t understand the generated code.

You offer tests as a solution. The trouble is, how do you write good tests if you don’t understand the possibilities in great detail?

I know of only two ways to write good tests. One is to do Test-Driven Development (TDD), writing the code alongside the tests, adding more and more sophisticated tests as you notice potential weaknesses in the code. The other is to do Property-based Testing (PBT), which lets you state generic properties that the code must satisfy and then lets a random input generator sniff around the expected inputs and many edge cases.
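To make the PBT idea concrete, here is a minimal hand-rolled sketch that uses only the standard library. Everything in it is an illustrative assumption: the `merge_sorted` example, the deliberately flawed `merge_sorted_buggy`, and the helper names are all made up for this post. Real PBT libraries such as Hypothesis (Python) or QuickCheck (Haskell) handle the input generation and the shrinking of counterexamples far better than this loop does.

```python
import random
from collections import Counter


def merge_sorted(a, b):
    """Merge two already-sorted lists into one sorted list."""
    out, i, j = [], 0, 0
    while i < len(a) and j < len(b):
        if a[i] <= b[j]:
            out.append(a[i])
            i += 1
        else:
            out.append(b[j])
            j += 1
    out.extend(a[i:])
    out.extend(b[j:])
    return out


def merge_sorted_buggy(a, b):
    """Hypothetical plausible-looking version that silently drops duplicates."""
    return sorted(set(a) | set(b))


def random_sorted_list(rng):
    # Short lists over a narrow value range make empty inputs and
    # duplicates common, which is where naive code tends to break.
    return sorted(rng.randint(-3, 3) for _ in range(rng.randint(0, 6)))


def find_counterexample(merge, trials=500, seed=0):
    """Return the first (a, b) that violates a property, or None if none is found."""
    rng = random.Random(seed)
    for _ in range(trials):
        a, b = random_sorted_list(rng), random_sorted_list(rng)
        result = merge(a, b)
        # Property 1: the output is in non-decreasing order.
        is_sorted = all(x <= y for x, y in zip(result, result[1:]))
        # Property 2: the output holds exactly the input items, duplicates included.
        same_items = Counter(result) == Counter(a + b)
        if not (is_sorted and same_items):
            return a, b
    return None
```

Calling `find_counterexample(merge_sorted_buggy)` should hand back a pair of lists containing a repeated value, while `find_counterexample(merge_sorted)` should return `None`. Note that the second property only got written because someone understood that duplicates matter in this domain.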

TDD requires you to understand the code in detail. PBT requires you to understand the data domain in detail. The first won’t work if you’re using generative AI to do anything besides offering a very rough first draft. The second might work, but if you already have such a detailed understanding of the model, and you know the code techniques to implement it, then why bother with the AI at all? If you don’t know the code techniques, then who will maintain that code?

I would rather not be negative. But I can see no good coming out of generative AI, except in the very narrow range of providing a list of options for further consideration.

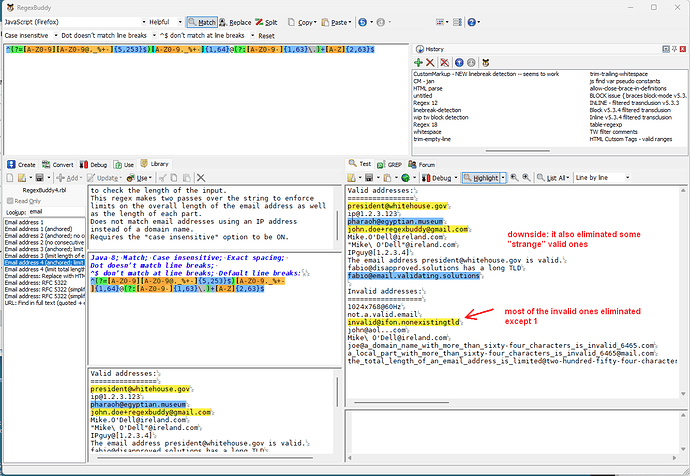

For this specific case, do you have any sense of what sorts of legal email addresses it will reject? (There are many!) Do you have any sense of what sorts of illegal email addresses it will accept? (As far as I can tell, only those with parts too long, but I haven’t looked closely.)
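As an illustration of the kind of probing I mean, here is a sketch against a made-up pattern. To be clear, `SIMPLE_EMAIL` is a hypothetical stand-in and NOT the pattern from the image above, so its failures will differ from the real ones. The sample addresses are judged against the RFC 5322 address syntax.

```python
import re

# Hypothetical pattern for illustration only; NOT the one in the image.
SIMPLE_EMAIL = re.compile(
    r"[A-Za-z0-9._-]+@[A-Za-z0-9-]+(\.[A-Za-z0-9-]+)*\.[A-Za-z]{2,4}"
)


def accepts(address):
    """True if the whole string matches the hypothetical pattern."""
    return SIMPLE_EMAIL.fullmatch(address) is not None


# Syntactically legal addresses that this pattern rejects.
legal_but_rejected = [
    "o'brien@example.com",     # apostrophe is allowed in the local part
    "user+tag@example.com",    # so is '+'
    '"john doe"@example.com',  # quoted-string local part
    "user@[192.0.2.1]",        # domain literal
    "user@example.museum",     # top-level domain longer than four letters
]

# Syntactically illegal addresses that this pattern accepts.
illegal_but_accepted = [
    ".user@example.com",       # unquoted local part may not start with a dot
    "a..b@example.com",        # nor contain two consecutive dots
]

for address in legal_but_rejected:
    print("rejected:", address, not accepts(address))
for address in illegal_but_accepted:
    print("accepted:", address, accepts(address))
```

Both lists exist only because someone already knew where the address grammar gets awkward. That is exactly the knowledge you need before you can judge generated code like this.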