I think it’s possible, and it’s definitely something I’ve been considering, but I wanted to finish cleaning up the videoparser first, incorporating some of what I learned from building out the audioparser.

The floating player was actually conceived as a mobile feature, since I sometimes need to pause and skip back and forth while taking a note. Within that context:

- it works fairly well, but it doesn’t consistently play nicely with every screen interaction (opening context menus in streams, having the keyboard open, etc.);
- I also don’t think I will add scrubbing to the floating window, since (at least on Android) those controls should live in the notification.

Dragging was an afterthought, added once I saw during testing how well the player seemed to work on desktop. I agree with your assessment that, in that context, scrubbing would be useful as well.

I think you may be right. The state mechanism and the qualify macro are both areas where I could use more practice, so perhaps this is the time. It is also a bit more complex, which is why I went with field values initially; perhaps you could help me work through it conceptually?


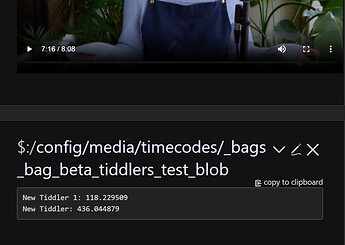

The state would need to be unique to both the source and the context, i.e. `$:/state/src.instance`.
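A minimal TypeScript sketch of that naming idea follows. It is a plain model, not TiddlyWiki code: `stateTitle`, `fnv1a`, and `contextId` are hypothetical names, and it assumes the context can be reduced to a stable string (roughly the role the qualify macro plays, since it derives a suffix from the stack of transcluded tiddlers leading to a widget). The hash only keeps the title short; any deterministic suffix would do.

```typescript
// Deterministic 32-bit FNV-1a hash over UTF-16 code units, as 8 hex digits.
function fnv1a(text: string): string {
  let hash = 0x811c9dc5; // FNV offset basis
  for (let i = 0; i < text.length; i++) {
    hash ^= text.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193); // FNV prime, 32-bit multiply
  }
  return (hash >>> 0).toString(16).padStart(8, "0");
}

// Hypothetical helper: a state title unique to one source AND one context.
// Assumption: `contextId` is a stable string describing where the player is
// rendered (e.g. the chain of tiddlers it is transcluded through).
function stateTitle(sourceTitle: string, contextId: string): string {
  return `$:/state/${sourceTitle}.${fnv1a(contextId)}`;
}

// The same audio rendered in two different places gets two separate states.
console.log(stateTitle("song.mp3", "StoryRiver/Journal"));
console.log(stateTitle("song.mp3", "Sidebar/Recent"));
```

Because the suffix depends only on the context string, the player and anything else rendered in that same context can recompute the same title independently.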

Notes that reference the “current time” would need to capture it from the state tiddler. So we would need (in plain language, to start) a way to describe the relationship between the context in which the “create new note” button appears and the corresponding `$:/state` tiddler. Conceptually, is there a way to do that within a template that audio tiddlers are passed through, or would this “create new note” button need to be part of the audioparser?
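The relationship can be sketched, under stated assumptions, as two pieces of code that agree on one state title: the player writes its position into the state tiddler, and the button, rendered in the same context, reads it once and copies it into a static field on the new note. This TypeScript model uses a `Map` in place of the wiki; the `current-time`, `source`, and `start` field names and both functions are hypothetical.

```typescript
// Hypothetical in-memory model of a wiki: tiddler title -> fields.
type Fields = Record<string, string>;
type Store = Map<string, Fields>;

// The player (assumed) keeps its position in its state tiddler,
// in a hypothetical "current-time" field holding seconds as a string.
function updatePlayerState(store: Store, state: string, seconds: number): void {
  store.set(state, { ...(store.get(state) ?? {}), "current-time": String(seconds) });
}

// The "create new note" button, rendered in the same context as the player,
// is given the same state title. It copies the current time into a static
// field, so the note no longer depends on the state after creation.
function createNote(store: Store, state: string, source: string, noteTitle: string): Fields {
  const current = store.get(state)?.["current-time"] ?? "0";
  const note: Fields = { title: noteTitle, source, start: current };
  store.set(noteTitle, note);
  return note;
}

const wiki: Store = new Map();
const playerState = "$:/state/song.mp3.1a2b3c4d"; // example title; hash part is made up
updatePlayerState(wiki, playerState, 83.5);
const note = createNote(wiki, playerState, "song.mp3", "Note on song.mp3");
updatePlayerState(wiki, playerState, 120); // playback continues
console.log(note.start); // prints 83.5
```

In this model the button does not need to live inside the audioparser; it only needs to compute the same state title as the player, which rendering both from one template would provide.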

Would you agree that timecodes in notes should be field values, since they are not “states” but static values (or ranges)? If so, how should the time be called?

- in the context of `$:/state/src.instance`, which would tie the note directly to the instance (and context) of the material in which it was generated;
- via the src itself, which would require altering the state for the src before calling it;
- within the context of the note itself, i.e. an embedded player in each note (not my favorite solution, but it may work if we have multiple templates we’re passing through); or
- some completely different approach I haven’t considered yet?
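If timecodes are stored as static field values, as suggested above, a note only needs a start (for a point) or a start and end (for a range). Below is a minimal TypeScript sketch, assuming hypothetical `start`/`end` fields holding `m:ss` or `h:mm:ss` strings; nothing here is an existing TiddlyWiki API, and `parseTimecode`, `formatTimecode`, and `isActive` are illustrative names.

```typescript
// Hypothetical note shape: "start" alone marks a point, "start" + "end" a range.
interface TimedNote { title: string; start: string; end?: string; }

// Parse "ss", "mm:ss" or "hh:mm:ss" into seconds; only the last part may have decimals.
function parseTimecode(code: string): number {
  const trimmed = code.trim();
  if (!/^\d+(?::\d+){0,2}(?:\.\d+)?$/.test(trimmed)) {
    throw new Error(`Invalid timecode: ${code}`);
  }
  return trimmed.split(":").map(Number).reduce((total, part) => total * 60 + part, 0);
}

// Format seconds as "m:ss" or "h:mm:ss"; fractional seconds are dropped.
function formatTimecode(seconds: number): string {
  const whole = Math.floor(seconds);
  const h = Math.floor(whole / 3600);
  const m = Math.floor((whole % 3600) / 60);
  const ss = String(whole % 60).padStart(2, "0");
  return h > 0 ? `${h}:${String(m).padStart(2, "0")}:${ss}` : `${m}:${ss}`;
}

// True if playback position `now` (seconds) falls inside the note's range.
// Point notes (no "end") match for `tolerance` seconds after their start.
function isActive(note: TimedNote, now: number, tolerance = 1): boolean {
  const start = parseTimecode(note.start);
  const end = note.end !== undefined ? parseTimecode(note.end) : start + tolerance;
  return now >= start && now <= end;
}

const rangeNote: TimedNote = { title: "Chorus", start: "1:00", end: "2:00" };
console.log(isActive(rangeNote, 90)); // prints true
console.log(formatTimecode(parseTimecode("1:02:03"))); // prints 1:02:03
```

Storing the human-readable string keeps the field editable by hand, at the cost of parsing it whenever the player needs a number; storing raw seconds would be the opposite trade-off.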