EDIT: found a relevant GitHub issue: "Please GZip $:/core, maybe?" (Jermolene/TiddlyWiki5 issue #4262).

The Compression Streams API has been supported in all major browsers since May 9, 2023.

We can use it to compress and decompress data with gzip in a few lines of JavaScript:

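```javascript
// Compress a Blob with gzip and return the compressed data as a new Blob.
async function compress(blob) {
  // pipeThrough() returns a ReadableStream synchronously, so no await is needed here.
  const cs = blob.stream().pipeThrough(new CompressionStream("gzip"));
  // Wrapping the stream in a Response is a simple way to collect it back into a Blob.
  return await new Response(cs).blob();
}

// Decompress a gzip-compressed Blob and return the original data as a Blob.
async function decompress(blob) {
  const ds = blob.stream().pipeThrough(new DecompressionStream("gzip"));
  return await new Response(ds).blob();
}
```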
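Since tiddlers and plugins are mostly text, it may be handier to work with strings instead of Blobs. Below is a minimal sketch, assuming a modern browser or Node.js 18+ (where `CompressionStream`, `DecompressionStream`, `Blob` and `Response` are globals). The names `compressText` and `decompressText` are hypothetical helpers, not part of TiddlyWiki's API.

```javascript
// Hypothetical helpers: gzip a string into bytes, and back again.
// Assumes CompressionStream, DecompressionStream, Blob and Response are
// available as globals (modern browsers, Node.js 18+).

async function compressText(text) {
  // new Blob([text]) encodes the string as UTF-8 bytes.
  const stream = new Blob([text]).stream().pipeThrough(new CompressionStream("gzip"));
  const buffer = await new Response(stream).arrayBuffer();
  return new Uint8Array(buffer);
}

async function decompressText(bytes) {
  const stream = new Blob([bytes]).stream().pipeThrough(new DecompressionStream("gzip"));
  // Response.text() decodes the decompressed bytes as UTF-8.
  return await new Response(stream).text();
}
```

For any string `s`, `decompressText(await compressText(s))` should give back `s` unchanged, and the compressed bytes start with the gzip magic number `0x1f 0x8b`, so other gzip tools can read them too.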

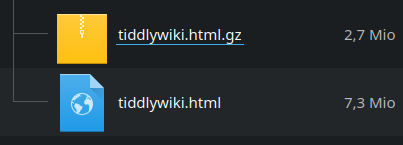

I did a quick test: empty.html shrinks from 2.4 MB to 0.431 MB (about 80% smaller).
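To reproduce this kind of measurement on other files, one can compare Blob sizes before and after compression. This is a minimal sketch, assuming the same browser/Node.js 18+ globals; `gzipSavings` is a hypothetical helper name. In a browser, the `File` from an `<input type="file">` can be passed in directly, since a `File` is a `Blob`.

```javascript
// Hypothetical helper: report how much gzip shrinks a Blob (or File).
async function gzipSavings(blob) {
  const compressed = await new Response(
    blob.stream().pipeThrough(new CompressionStream("gzip"))
  ).blob();
  // Guard against dividing by zero for an empty input.
  const ratio = blob.size === 0 ? 1 : compressed.size / blob.size;
  return {
    originalBytes: blob.size,
    compressedBytes: compressed.size,
    // Percentage of the original size saved, rounded to one decimal place.
    percentSaved: blob.size === 0 ? 0 : Math.round((1 - ratio) * 1000) / 10,
  };
}
```

As a sanity check on the figures above: going from 2.4 MB to 0.431 MB saves 1 − 0.431 / 2.4 ≈ 0.82, i.e. roughly 82%. Actual savings will vary with the content of each file.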

You can test this here: Download compressed data

I think adding gzip support would be great for backups and for the plugin library.