I’m normally too nervous to post my small findings, as I’m an amateur who often doesn’t do things in the most optimal way, and this discovery may not be news to the super users either. But in all my searching for ways to optimize render and refresh times in my beefier projects, I’ve never found anything that covered this, and it made a huge difference (close to a tenfold speedup). So I’m posting about it in case it helps others, or in case someone can improve on it further.

I noticed the lag problem in my current pet project, BlinkieWiki. It deals with a large number of tiddlers (just over 20,000 at the time of writing) containing web graphics with complex metadata. The site has a search interface for filtering the graphics by these different kinds of metadata, such as primary color, keywords, and creator.

The main filter expression that decides which graphics to display used the `filter` operator to reference a field value containing additional filter expressions. At the time this was necessary, because those values are toggle-able and can change on the fly (for example, whether a graphic has certain color tags, or whether certain kinds of content should be filtered out). However, every time the search query was edited, re-rendering the results could take as long as 10 seconds (with throttled refresh factored in), which was unacceptable.

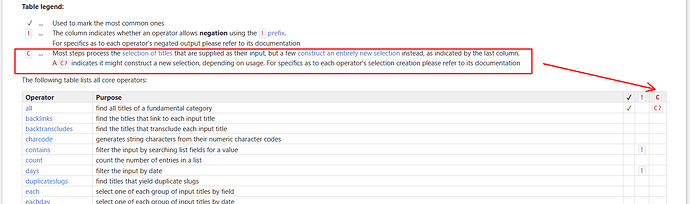

While experimenting to find what was causing the lag, I found that the overwhelming culprit was the `filter` operator. Without it, rendering was orders of magnitude faster, even with the same number of input tiddlers. But I still needed the toggle-able filter expressions. Where my first setup had been this (simplified for the example):

```
<$list filter="[all[tiddlers]tag[webgraphic]search:keywords{!!keywords}filter{!!ReferenceToExtraFilters}]">list item</$list>
```

the newer, faster equivalent was to turn the entire section into a macro and use parameter substitution instead:

```
\define displayResults(filter) <$list filter="[all[tiddlers]tag[webgraphic]search:keywords{!!keywords}$filter$]">list item</$list>

...

<$macrocall $name="displayResults" filter={{!!ReferenceToExtraFilters}}/>
```

(I know this syntax is deprecated, so it isn’t best practice. More experienced users are welcome to suggest how it could be improved further.)
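One possible direction is offered here as an untested sketch, not a benchmarked fix. TiddlyWiki’s `subfilter` operator runs its operand as a filter expression with the incoming titles as its input. That means it evaluates the operand once for the whole list, rather than once per title as `filter` does. If that holds in your version, the macro and `$macrocall` could be dropped entirely. The names `webgraphic`, `keywords` and `ReferenceToExtraFilters` come from the example above. The catch is that the field would have to keep its full bracketed form (e.g. `[tag[red]]`, a made-up value).

```
<!-- Untested sketch: the ReferenceToExtraFilters field holds a complete filter
     expression, brackets included, e.g. [tag[red]] (hypothetical value).
     subfilter evaluates it once, using the matching webgraphic titles as its input. -->
<$list filter="[all[tiddlers]tag[webgraphic]search:keywords{!!keywords}subfilter{!!ReferenceToExtraFilters}]">list item</$list>
```

There are two things to check before relying on it. First, as far as I can tell, an empty field makes `subfilter` return nothing, so the field should always hold some expression. Second, field references such as `{!!keywords}` inside the stored expression resolve against the current tiddler of the page (as they would after substitution), not against each candidate graphic. Its speed relative to plain substitution hasn’t been measured here.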

Of course, the stored filters had to be formatted so that they could be substituted into the main filter without breaking it, so they omit the opening and closing square brackets that normally wrap a filter expression.
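To make that concrete, here is a hypothetical value in both forms (the tags `red` and `nsfw` are made up for illustration). It is followed by the filter the macro effectively runs once `$filter$` is replaced:

```
Field value as a complete expression (what the filter operator expects):
[tag[red]!tag[nsfw]]

The same value as bare steps (what $filter$ substitution needs):
tag[red]!tag[nsfw]

Main filter after substitution:
[all[tiddlers]tag[webgraphic]search:keywords{!!keywords}tag[red]!tag[nsfw]]
```

One consequence is that the stored steps are spliced into a single run. A value that relies on several runs, such as `[tag[red]] [tag[blue]]`, can’t simply have its outer brackets stripped without changing what it matches.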

As a result, the lag dropped from ~7-10 seconds to a mere 0.8-1.5 seconds(!), the single largest performance improvement I’ve ever achieved with this project. It was an important lesson: when working with large lists, especially ones that need complex filtering, avoid the `filter` operator and other redundant steps unless you really need them, because there are often ways to get the same result that perform significantly better. I’m sure the broader applications of this ‘lesson’ are more complicated than that, and there are probably better ways to solve the problem than mine. But I’m a little proud of the discovery, so I’m sharing it anyway.
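Here is why the difference may be so large. As far as I understand the TiddlyWiki documentation, the `filter` operator evaluates its operand separately for every input title (with `currentTiddler` set to that title) and keeps the titles whose result is non-empty. That means one sub-evaluation for every title reaching that step, which in a wiki of 20,000 tiddlers can mean thousands. Steps written directly into the main run are applied to the list in one pass instead. The two lists below use a hypothetical `red-only` macro and `red` tag. They should show the same titles, but only the first pays the per-title cost:

```
\define red-only() [tag[red]]

<!-- Slow path: [tag[red]] is evaluated once per webgraphic title -->
<$list filter="[all[tiddlers]tag[webgraphic]filter<red-only>]">list item</$list>

<!-- Fast path: tag[red] is applied to the whole list in a single run -->
<$list filter="[all[tiddlers]tag[webgraphic]tag[red]]">list item</$list>
```

The two only agree when the extra steps don’t depend on the current tiddler. Inside `filter`, `currentTiddler` refers to each candidate title, while in the inlined version it keeps whatever value it had outside the list.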