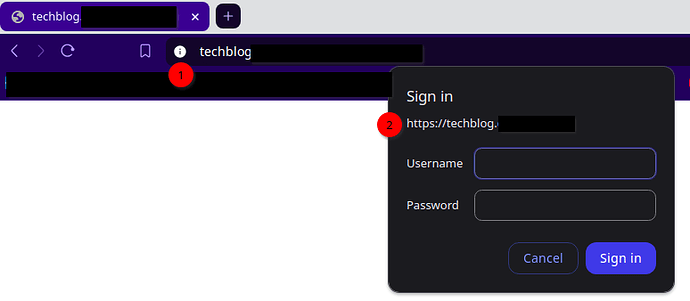

I have a Node.js TiddlyWiki with a Let’s Encrypt certificate, served via an nginx reverse proxy, but I’ve noticed when I log in that the browser address bar does not indicate that the connection is secure (#1 in image), even though the sign-in form does (#2). Should I be concerned? Is my password being exposed over an insecure connection at some point in the login process?

If the Let’s Encrypt mechanism works, and since the address seems to be https://, the communication should be secure.

The latest Chrome-like browsers no longer show the secure-connection icon. You can open the (i)nfo icon and see what it tells you.

You can test your https:// settings / quality / configuration at: SSL Server Test (Powered by Qualys SSL Labs)

The ssl server test gives me B’s on all tests. I don’t know what that means. There wasn’t any explanation to go along with the test.

And Brave (Chrome) won’t let me select the info icon during the login process. When I do, the apparently secure login popup (shows an https:// address) vanishes and the info icon then says the website is insecure.

Regards,

Captain Packers

Not really. B is probably what you get with the default settings. Google’s sites are optimized for advertising, 3rd-party ad tools and maximum reach.

There are 4 major sections in the summary, which show you where you have room for improvements.

- Certificates

- Protocol Support

- Key Exchange

- Cipher Strength

The summary page also shows a link to the documentation page.

1. Certificates should be green if the Let’s Encrypt settings are done right.

2. Protocol Support

Browsers usually start communication with a site using the most secure protocol they have available. If the server does not offer that protocol, they start downgrading, possibly down to protocols that are known to be insecure.

Browsers usually behave well, so they do want to connect with a secure protocol.

A malicious provider of, e.g., an open Wi-Fi hotspot may be a different thing. So your service should only support TLS 1.2 and higher.

The linked Wikipedia page contains a lot of useful info.
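For Nginx, the protocol restriction can be sketched roughly as follows. This is only a minimal sketch, assuming Nginx is the component that terminates TLS; the file name tls-protocols.conf is made up for illustration. ssl_protocols is the standard Nginx directive for this, and TLSv1.3 needs a reasonably recent Nginx and OpenSSL.

```shell
# Write a small snippet that limits Nginx to TLS 1.2 and TLS 1.3.
# The file name is hypothetical; include it from your HTTPS server block.
cat > tls-protocols.conf <<'EOF'
# Only offer TLS 1.2 and newer; older protocols are refused.
ssl_protocols TLSv1.2 TLSv1.3;
EOF

# After including the snippet, check the configuration and reload, e.g.:
#   nginx -t && nginx -s reload
```

Re-running the SSL Labs test afterwards should show whether the older protocols are gone.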

3. Key Exchange and 4. Cipher Strength

Cryptographic keys are used to start communication between the browser and the server.

Keys should be at least 128 bits to be considered somewhat secure. Many of these settings will probably be outdated once quantum computers are a commodity. But until then we are good to go.

So your summary should not show any keys with fewer than 128 bits. Even an A-rated server configuration still lists many 128-bit cipher suites that are considered weak, but some of them are needed to connect to older devices.

So it depends on the use case of your site. The Handshake Simulation summary tells you which devices are able to connect to your site.

You can start with a very secure setting and “lock down” your site depending on your use case. Then you can “loosen” the settings until they match your desired target audience.

Protocol Details

This is probably one of the most important summaries, because it contains information about known attacks. I think your configuration should be OK, since you would probably get a D or worse if your site were vulnerable.

My personal conclusion

Since you asked and you probably do care I think:

With a bit of tweaking you should be able to reach an A rating, if you feel better that way.

But it depends on your service provider which settings you are able to tweak. They should be somewhere in the security-related config section of your user panel.

Or Nginx should have the possibility to adjust these settings too, but I do not know Nginx well enough.

Hope that makes sense.

-mario

I assumed that since https://www.google.es is served through HTTP/3 (which uses TLS 1.3 and therefore requires a safe cipher suite), it is as secure as tech allows.

That is probably an oversimplification.

I did run the test, and Google seems to get a B because it allows TLS 1.0 to support old devices, which is needed for their use case. Their service should be available to basically everyone.

My personal opinion: limiting the target audience to TLS 1.2+ is good enough for our TW-based use cases.

Thanks to all for your feedback.

I’m currently running my Node.js wiki on an unencrypted local server port (HTTP). Is it possible to run it on an encrypted local port using HTTPS? If so, does anyone know how to set that up? What would the listen command look like? I know there are some certificate options available, but I’ve never tried them.

Is there a range of ports that will automatically use HTTPS? If you just use one of those in your listen command will all the transmission in and out of TW then be encrypted?

There are basic docs for setting up HTTPS here:

https://tiddlywiki.com/#Using%20HTTPS

The complex part is generating the SSL certificate and key. They are passed to the regular listen command using its tls-cert and tls-key parameters, and that command then starts the server.
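A minimal sketch of those steps, assuming openssl is installed and a self-signed certificate is acceptable for local use (browsers will warn about it). The file names key.pem and server.crt, the folder ./my-wiki and the function name are placeholders, not something prescribed by TiddlyWiki.

```shell
# Remember where the certificate files are created, so absolute paths can be
# passed to TiddlyWiki regardless of how it resolves relative paths.
CERT_DIR="$(pwd)"

# Create a self-signed certificate and private key, valid for one year.
# -nodes leaves the key unencrypted so the server can start without a prompt.
openssl req -x509 -newkey rsa:2048 -nodes -days 365 \
  -subj "/CN=localhost" \
  -keyout "$CERT_DIR/key.pem" -out "$CERT_DIR/server.crt"

# Start TiddlyWiki over HTTPS with the generated files.
# Wrapped in a function because the command blocks until the server stops.
start_https_wiki() {
  tiddlywiki ./my-wiki --listen \
    tls-cert="$CERT_DIR/server.crt" tls-key="$CERT_DIR/key.pem"
}
```

Calling start_https_wiki should then serve the wiki at https://localhost:8080/ instead of http://. There is no special port range that switches on HTTPS by itself; it is the certificate and key parameters that do it.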

Why do you want to make everything more complex on a local system? Is it on a network, where others have the possibility to scan your network communication?

Are you saying that the nginx proxy pass from port 443 to 8080 is secure and not likely to be scanned as long as it all happens on the same server (unless of course someone manages to hack your server)?

Regards,

Captain Packers

I’d say it’s by definition not secure, since it’s plain HTTP, but it is safe. If your TiddlyWiki (or any other back end service) is listening on localhost:8080 and Nginx only proxies connections that come in over TLS, then all of the external traffic to that server is over TLS; the connection between the proxy and the back end doesn’t ever leave the server.

If your back end is on a different computer than the proxy, then the communication between the proxy and back end will be unencrypted. If this is a trusted network, then it’s probably safe unless and until you think there’s an intruder with the ability to intercept the traffic. In this case, you could configure TiddlyWiki to use a TLS certificate, so that Nginx proxies to it using TLS over another port (say 8443) and the communication is encrypted over the network between the proxy and back end.
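The two setups described above could look roughly like this in an Nginx site file. This is a sketch under assumptions: example.com, the Let’s Encrypt certificate paths, the file name and the remote back end address 10.0.0.111:8443 are all placeholders.

```shell
# Write a hypothetical Nginx site file for TLS termination in front of TiddlyWiki.
cat > tiddlywiki-proxy.conf <<'EOF'
server {
    listen 443 ssl;
    server_name example.com;

    ssl_certificate     /etc/letsencrypt/live/example.com/fullchain.pem;
    ssl_certificate_key /etc/letsencrypt/live/example.com/privkey.pem;

    location / {
        # Back end on the same machine: the plain HTTP hop never leaves the server.
        proxy_pass http://127.0.0.1:8080;
        # Back end on another machine that serves TLS itself (assumed address):
        # proxy_pass https://10.0.0.111:8443;
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    }
}
EOF
```

Only one of the two proxy_pass lines would be active at a time, depending on where the back end runs.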

If you’re worried about these sorts of things beyond a theoretical exercise, it’s a good idea to take some time to think about the threat model you need to defend against. Figure out where you’re most at risk, and do things that mitigate that risk. Don’t just apply security controls for no reason (unless it’s just for learning and you’ve got backups of everything important–then go wild!)

If someone has access to localhost on your server you already lost.

TiddlyWiki can start a local server, e.g. on port 8080, with

tiddlywiki ./editions/my-wiki --listen

Then everyone who has access to your machine can connect to the wiki using

http://localhost:8080/

If the server has, e.g., the IP 10.0.0.111, you would run it with

tiddlywiki ./editions/my-wiki --listen host=10.0.0.111

Then everyone who is connected to your local network 10.0.0.x can see the wiki if they use http://10.0.0.111:8080 in the browser.

You only need an Nginx proxy and encryption, if you want to connect your server to the internet.

Right.

I can’t add to that other than: “HOW did you allow local access?”

I didn’t allow access. I’m just thinking service providers could gain access and systems get hacked all the time (not that mine should be a highly visible or high priority target for hackers). If your stuff isn’t encrypted at rest, then it is potentially vulnerable.

Regards,

Captain

Service providers will always have access, unless you use a password-encrypted single-file wiki.

Connection over HTTPS will not encrypt your content at rest. The system we discuss here will only handle encryption for data in transit.

Can you easily convert a Node.js wiki to a single-file encrypted wiki and serve it with nginx, maybe by dropping it in the public folder and naming it index.html?

Regards,

Donald Lund

Hi Donald,

I am not really sure what you are trying to achieve. You jump between internet-facing HTTPS questions and “local network” questions, which are completely different things.

So I’ll try to sort it out.

Basic Authentication

From the screenshot in your OP it seems you use an internet hosting service with “basic authentication” enabled.

Basic auth can be handled natively by Nginx, so the dialogue is created by the Nginx proxy. If you pass the challenge, the proxy will forward connections between the browser and the TiddlyWiki server.

- In this case the connection between the browser and the Nginx proxy uses SSL/TLS encryption.

- The plain HTTP connection on port 8080 between the proxy and your TW server will be secured by the OS user and user-group based access rights.

browser ← HTTPS, port 443 → Nginx proxy ← HTTP, port 8080 → TiddlyWiki server

So all in all, security “in transit” should be OK.
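For reference, basic auth in Nginx usually comes down to a password file plus two directives. The following is a minimal sketch, assuming openssl is available; the user name, the password, the file names and the path /etc/nginx/htpasswd are placeholders.

```shell
# Create a password file with one user. openssl passwd -apr1 produces an
# Apache-style MD5 hash, one of the formats Nginx accepts in this file.
# Note: a password given on the command line may show up in shell history.
printf 'wikiuser:%s\n' "$(openssl passwd -apr1 'change-me')" > htpasswd

# Snippet for the server or location block that should ask for a login.
cat > basic-auth.conf <<'EOF'
auth_basic           "TiddlyWiki";
auth_basic_user_file /etc/nginx/htpasswd;
EOF
```

The generated htpasswd file would then be copied to the path named in auth_basic_user_file. Because the login dialogue is only ever sent over the HTTPS side of the proxy, the password travels encrypted.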

Security at rest using Nginx as a Proxy

If TiddlyWiki is used in a Node.js configuration, all the tiddlers are stored in “plain text” in the file system.

So again the OS will handle “security at rest” using OS level user and user-group access rights.

In a well configured OS, you as a user, and the Node.js process will have access to your tiddler files in plain text. All other users will have no access. This includes the Nginx proxy. It is only used to forward communication between the internet and the Node.js server.

If configured correctly, the proxy should run as a different user, e.g. www-user, and have no direct access to your data.

There is one exception: system administrators. They will have full access to the system, by definition.
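On a Linux or similar system, the access rights described above can be sketched like this. It assumes the wiki lives in ./my-wiki and is owned by the user that runs the Node.js server; both are assumptions for the example.

```shell
# Assumed layout: the wiki folder with its plain text tiddlers.
mkdir -p ./my-wiki/tiddlers

# Remove all access for group and others, recursively. The owner keeps access.
chmod -R go-rwx ./my-wiki

# Show the resulting permissions of the wiki folder.
ls -ld ./my-wiki
```

With that in place, a proxy running under a different account cannot read the tiddler files directly; it can only talk to the Node.js server over the network port.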

Security at rest using an Encrypted Single File Wiki

In short: yes.

More details

E.g. a TiddlyWiki Node.js configuration can be used locally on your laptop to create content. This content will be stored as plain text tiddlers on your drive.

The command to start a local server on the laptop is:

tiddlywiki ./my-wiki-server --listen

Important: As you can see below, the tiddlywiki.info file contains an includeWikis section, so the server will only work if you also follow the next section of this “how-to”.

./my-wiki-server/tiddlywiki.info

    {
        "description": "Server configuration for the my-wiki edition",
        "plugins": [
            "tiddlywiki/tiddlyweb",
            "tiddlywiki/filesystem",
            "tiddlywiki/internals"
        ],
        "themes": [
            "tiddlywiki/vanilla",
            "tiddlywiki/snowwhite"
        ],
        "includeWikis": [
            "../my-wiki"
        ],
        "config": {
            "default-tiddler-location": "../my-wiki/tiddlers"
        }
    }

Building a single file wiki

With a ./my-wiki/tiddlywiki.info file similar to the following example, you can easily build a single file wiki and an encrypted wiki with

tiddlywiki ./my-wiki --build

The command above will build both wikis in the default output directory, which is the output folder inside the wiki folder unless you change it with --output. So the output directory should contain:

- index.html

- encrypted.html

If you want to build only one of them you can use

tiddlywiki ./my-wiki --build index

or

tiddlywiki ./my-wiki --build encrypted

The tiddlywiki.info file below is just an example: it contains the password in plain text, which you should not do in production if others can access that file.

./my-wiki/tiddlywiki.info:

    {
        "description": "Some general description about my-wiki",
        "plugins": [
            "tiddlywiki/internals"
        ],
        "themes": [
            "tiddlywiki/vanilla",
            "tiddlywiki/snowwhite",
            "tiddlywiki/readonly"
        ],
        "languages": [],
        "build": {
            "index": [
                "--render", "$:/core/save/all", "index.html", "text/plain"
            ],
            "encrypted": [
                "--password", "password",
                "--render", "$:/core/save/all", "encrypted.html", "text/plain",
                "--clearpassword"
            ]
        },
        "config": {
            "retain-original-tiddler-path": true
        }
    }
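One way to keep the password out of tiddlywiki.info is to run the same commands as the “encrypted” build target by hand and supply the password at run time. This is only a sketch: the function name and the WIKI_PASSWORD variable are made up for the example, while --password, --render and --clearpassword are the commands already used in the build target.

```shell
# Build encrypted.html without storing the password in tiddlywiki.info.
build_encrypted_wiki() {
  # Refuse to run without a password instead of producing an unencrypted file.
  if [ -z "${WIKI_PASSWORD:-}" ]; then
    echo "Set WIKI_PASSWORD first" >&2
    return 1
  fi
  # Single quotes keep the shell from touching $:/core/save/all.
  tiddlywiki ./my-wiki \
    --password "$WIKI_PASSWORD" \
    --render '$:/core/save/all' encrypted.html text/plain \
    --clearpassword
}
```

A possible use is to type the password into the variable with read -rs WIKI_PASSWORD, call build_encrypted_wiki, and then unset WIKI_PASSWORD again.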

Security at rest using Nginx as a file server

Now that you know how to create an encrypted.html file, it’s possible to serve that one using Nginx as a file server.

There is a fundamental difference with this configuration. To act as a file server, which Nginx can do exceptionally well, it has to have access to your “public” directory. So you need to make sure that your “basic auth” password is strong.

You can upload your encrypted.html file as index.html to the “public” directory that Nginx can access.

Since the new index.html is encrypted “at rest” on the server, nobody will have access to the data in the wiki unless they know the wiki password.

The wiki password should be different from the basic-auth password, which is described in the very first step.
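A file-server setup along those lines might look like the sketch below. The domain example.com, the certificate paths, /var/www/public, /etc/nginx/htpasswd and the file name are placeholders, and the basic-auth password file is assumed to exist already.

```shell
# Write a hypothetical Nginx site file that serves the encrypted wiki statically.
cat > tiddlywiki-static.conf <<'EOF'
server {
    listen 443 ssl;
    server_name example.com;

    ssl_certificate     /etc/letsencrypt/live/example.com/fullchain.pem;
    ssl_certificate_key /etc/letsencrypt/live/example.com/privkey.pem;

    # The "public" directory that Nginx is allowed to read.
    root  /var/www/public;
    index index.html;

    # Basic auth in front of the (already encrypted) wiki file.
    auth_basic           "TiddlyWiki";
    auth_basic_user_file /etc/nginx/htpasswd;
}
EOF

# Publishing then amounts to copying the encrypted build over index.html, e.g.:
#   cp ./my-wiki/output/encrypted.html /var/www/public/index.html
```

Note that a wiki served this way is effectively read-only on the server: changes are made locally and published by rebuilding and copying the file again.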

IMO that’s pretty much it

Hope that helps

-Mario

What an AWESOME description. This is very helpful. Thanks so much Mario.

Regards,

Captain