Thank you all for your thoughts and comments. You have reinforced my opinion: it is an almost impossible task except in special, simple circumstances. To do it properly requires meaning/understanding, which is way outside the state of today’s processing.

Funny. I conclude the opposite through semi-automation. I will keep this in mind and return if I address this.

- For analysis purposes you could install a large library of word lists in a plugin, save the detected words, and remove the plugin when done.

Maybe with a robust implementation of LLM/AI in TW this could be a possibility in the future:

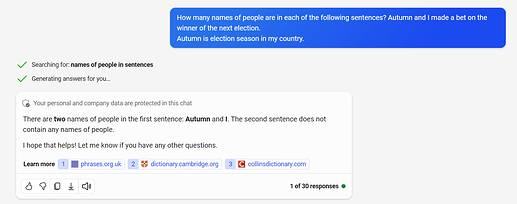

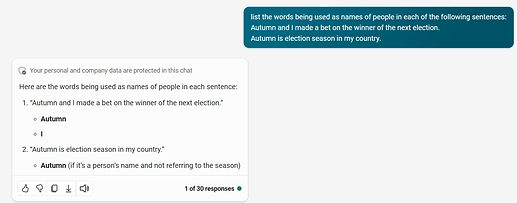

although on repeat trials it looks like sometimes copilot gets confused as well:

That scares the heck out of me!

- Perhaps not “in”, but “alongside TiddlyWiki”, with the tools to interact.

It is great when tools are effectively linked, but loosely linked, allowing any component to innovate independently.

of course it would be an optional plugin with plenty of customization. but i guess that’s a topic for another thread : )

Yes, on topic this loosely coupled idea was mentioned by me earlier where “word list plugins” are available to eliminate most words that are not proper nouns, thus allowing us to highlight and select proper nouns.

- Once done, remove the word lists if desired.
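As a rough illustration of the word-list idea, here is a minimal sketch. The `stopWords` set and the `candidateWords` helper are made-up names, the list is deliberately tiny, and none of this is an existing plugin; it only shows the principle of discarding capitalised words that appear in a list of common words.

```javascript
// Hypothetical, tiny stop-word list; a real "word list plugin" would be far larger.
const stopWords = new Set(['the', 'and', 'it', 'but', 'in', 'that', 'they', 'at']);

// Return the distinct capitalised words whose lower-case form is not a stop word.
const candidateWords = (text, stops = stopWords) => [...new Set(
  (text.match(/\b[A-Z][a-z]+\b/g) || [])
    .filter(word => !stops.has(word.toLowerCase()))
)].sort();

console.log(candidateWords('But they went to Trumper Park. That was in Sydney.'));
// → [ 'Park', 'Sydney', 'Trumper' ]
```

Sentence-initial words such as “But” and “That” drop out because they are in the list, which is the effect described above. Multi-word names are not joined up here.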

I imagine that on a server implementation a word list could be a data tiddler that is also a skinny tiddler, so it is only loaded if it is needed?

Aha, that explains another thread. (Edit: This topic was moved from Searching/indexing web site/page. The current topic is the one described as “another thread”.)

I have no idea if this would be of any help to you, but I just tried to write something that – with many false positives, and probably a few false negatives – identifies proper nouns in a document. I ran Tony McGillick Painter through pdf2go.com’s PDF → Text converter, and then fed the resulting text through this JS function:

    const properNames = pdf => [... new Set(
      pdf.replace(/\s+/g, ' ').match(/(?=[^])(?:\P{Sentence_Terminal}|\p{Sentence_Terminal}(?!['"`\p{Close_Punctuation}\p{Final_Punctuation}\s]))*(?:\p{Sentence_Terminal}+['"`\p{Close_Punctuation}\p{Final_Punctuation}]*|$)/guy)
        .map(s => s.trim())
        .filter(Boolean)
        .flatMap(s => [
          ... (s.match(/^(([A-Z]\w+\s)+){2,}/g) || []),
          ... (s.match(/((:?\s)[A-Z]\w+)+/g) || []),
        ])
        .map(s => s.trim())
    )].sort()

to get this result:

    [
      "At Redleaf Pool", "Aussie Rules", "Botanic Gardens", "But", "Christmas", "Communists",
      "Cross", "Domain", "Double Bay", "Edgecliff", "Friday", "HEN", "In", "Italian", "Italians",
      "Jewish", "Kings Cross", "Macleay Street", "Manchester", "McGILLICK Painter", "Melbourne",
      "Moore Park", "Paris", "Park", "Pool", "Redleaf Pool", "Saturday", "Sunday", "Sydney", "That",
      "The Cross", "They", "Tony McGILLICK Painter", "Trumper", "Trumper Park", "Vaucluse"
    ]

I’m sure we could revise the regexes to reduce the false positives.

Scott

I wrote an app (using Xojo) to do this also and was able to extract all words and most phrases containing capital letters. Makes things easier but when I went to add the tags to the tiddler I found I still needed to see the context of the word or phrase to make sure I got things right. This meant the extraction first saved little to no time/effort.

Might do some more playing though.

I found that TW_Tones’ suggestion of stop/go words would not be very efficient, as I would miss many terms that are also stop terms. Context, and hence meaning, is crucial.

Bobj

Dr. Bob Jansen


- not sure what this had to do with this topic?

My suggestion was to use stop terms, eliminating common nouns, verbs and other word sets, and to treat the remainder as a possible way of finding proper nouns.

However you identify text that may contain proper nouns, you can still show a preview or snippet of the text and provide a way to select and save a set of words, or even create a matching tiddler.

- My idea would be you progressively turn things you wish to refer to, into tiddlers and thus accrue a large set of tiddlers and the freelinks plugin can thus highlight them.

That’s probably my fault. I responded to the wrong topic. When I participated in the other thread about finding proper nouns, I misunderstood something fundamental. I thought the goal was to find an automated, repeatable way to recognize proper nouns in a string of text, presumably a tiddler. This topic (edit: that is, Searching/indexing web site/page) made it clear that this is for a one-off operation, an attempt to simplify some manual conversion effort. So having a number of false positives, if they’re not overwhelming, would probably not be an issue. And that leads to more possibilities.

I will try to move these posts to that topic.

> This meant the extraction first saved little to no time/effort.

I find this an interesting problem. I don’t know if I’ll find time, but if I do, would a version that gave results like the following be helpful?

    {
      /* ... */
      "Botanic Gardens": [
        "make me that survive, the Botanic Gardens, the Domain, Trumper Park, Moore Park: they are the "
      ],
      /* ... */
      "But": [
        "notice it at the time, but in retrospect it seems to have been happy. It is",
        "But it wasn’t really the football, it was the atmosphere",
        "prepare from Friday night onwards, but going to Trumper is easy.",
        /* ... */
      ],
      /* ... */
      "Trumper Park": [
        "Trumper Park has been persistent in my life.",
        "the people who went to Trumper Park on Sunday",
      ]
      /* ... */
    }

(or perhaps with the potential proper noun in ALL CAPS?)

The usefulness of this is directly proportional to the usefulness of the underlying PDF → text conversion, which for this document is not great.

But if this might be helpful, I’ll see if I can find the time. As I said, I find it an interesting problem.
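For anyone curious what producing that kind of output might involve, here is a minimal sketch and not the version Scott has in mind. The name `contextsFor` and the `radius` parameter are invented for illustration, and unlike the sample above it matches case-sensitively, so “But” would not pick up “but”.

```javascript
// Map each candidate phrase to the snippets of text in which it occurs.
// `radius` is the number of characters of context kept on each side (an arbitrary choice).
const contextsFor = (text, candidates, radius = 30) => {
  const flat = text.replace(/\s+/g, ' ');
  const result = {};
  for (const phrase of candidates) {
    if (!phrase) continue; // an empty phrase would never advance the search
    const snippets = [];
    let at = flat.indexOf(phrase);
    while (at !== -1) {
      const start = Math.max(0, at - radius);
      const end = Math.min(flat.length, at + phrase.length + radius);
      snippets.push(flat.slice(start, end).trim());
      at = flat.indexOf(phrase, at + phrase.length);
    }
    result[phrase] = snippets;
  }
  return result;
};

const sample = 'Trumper Park has been persistent in my life. The people who went to Trumper Park on Sunday.';
console.log(contextsFor(sample, ['Trumper Park'], 10));
```

Snippets are cut at a fixed character distance, so they can begin or end mid-word; snapping to word or sentence boundaries would be a natural refinement.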

Scott,

thanks for all your input, ideas and thoughts. It is greatly appreciated.

As I said before, I have a Xojo app (xojo.com is a flexible programming IDE that allows for compilation to macOS, Windows, iOS and Android, all from the same source code) that does something similar. It already provides the context that you are proposing, so, unless you are very motivated, I would not suggest you begin on this work.

I need to think all this through in more detail, especially as I am thinking TW might not be the most favourable tool to provide access to the archive’s documents. I can see this as an excellent FileMaker Pro web site, but the problem there is the cost of hosting the FileMaker solution.

I am waiting for Google to index the site so I can play around with Google site searching but it seems to be taking some time.

bobj

TW_Tones,

I have to apologise to you for my hasty remarks regarding your stop words suggestion.

After much additional work on my tool, I can now see how stop words will be useful as long as, as you intimated, they are built up over time across documents from the document set. Also, the stop words appear to be only single words so that if ‘And’ is a stop word, it can still be part of a proper noun phrase, such as ‘And Bob’ (the example is nonsensical but you can see my drift).

I think now I also need to save Go words between processing documents, those strings that I consider proper nouns so that in subsequent documents, they do not need to be reprocessed as they have been approved in an earlier document. This requires some more thought on my part though.
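One way the stop-word and go-word lists could work together between documents is sketched below. This is only a guess at the logic, not the Xojo app’s actual code; `triage`, `goWords` and `stops` are hypothetical names, and in practice both sets would be loaded from and saved to a file between runs.

```javascript
// Split candidates into phrases already approved (go words) and phrases that
// still need manual review. Single-word stop words are dropped silently.
const triage = (candidates, goWords, stopWords) => {
  const approved = [];
  const review = [];
  for (const phrase of candidates) {
    if (goWords.has(phrase)) {
      approved.push(phrase);
    } else if (!phrase.includes(' ') && stopWords.has(phrase.toLowerCase())) {
      continue; // stop words only rule out single words, so "And Bob" survives
    } else {
      review.push(phrase);
    }
  }
  return { approved, review };
};

const goWords = new Set(['Trumper Park']);    // approved in an earlier document
const stops = new Set(['and', 'but', 'the']); // built up over time
const { approved, review } = triage(['And', 'And Bob', 'Trumper Park', 'Vaucluse'], goWords, stops);
console.log(approved); // [ 'Trumper Park' ]
console.log(review);   // [ 'And Bob', 'Vaucluse' ]
```

Whatever is accepted from `review` would then be added to `goWords`, so the review list should shrink with each document processed.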

bobj

No problem, @Bob_Jansen. Perhaps I think of stop words differently: I mean a list of publicly known stop words such as “the, and, it”.

Warning! Grammar has never been my strong point, but with age I hope comes wisdom.

I like your term “go words” I would think of these as words you have nominated or perhaps even “go phrases”.

- I am not saying such go words or phrases could not have stop words included, but when you remove common words, verbs, common nouns a smaller list is available that may relate to a proper noun.

- Let’s highlight these “special words” in context, and provide the tool to register them as “go words or phrases”. The simplest method is to create a tiddler title.

For example, let’s say we remove common words and find one left in the text, “Galactic”. It may be next to a possible verb, “Federation”, and when we look at the actual text we see “This was when “The Galactic Federation” was born”. The capitalisation is also a giveaway. Our attention has been focused here because the word Galactic was identified. We select “The Galactic Federation” and create a tiddler, perhaps indicating it is a proper noun.

- Any future reference to The Galactic Federation will be highlighted and linked automatically if you use the freelinks plugin.

- Most content and text, will have a limited number of proper nouns and if your attention is drawn to them you can systematically build a list of those in the content, create tiddlers for them, and even store more info in them like a glossary.

- You could even collate all proper nouns used across multiple wikis

- Perhaps you can only highlight “special words” with mid sentence capitalisation.

By taking an approach like this there is value in identifying potential proper nouns, then selecting the actual proper nouns, and having the opportunity to provide more information, e.g. “The Galactic Federation” headquarters on Earth. Notice we just found another? “Earth”.

- As an alternative, rather than creating a tiddler you can just link the word, e.g. [[Earth]]. It becomes a missing tiddler, which is something you can list or retrieve.

- There is other value in seeing “special words” in your text, because it helps you draw inferences while you are highlighting proper nouns.

- The process is cumulative and the exceptions decrease rapidly over time.
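The mid-sentence capitalisation idea from the list above could be sketched roughly as follows. This is an assumption-laden illustration run outside TiddlyWiki: `linkMidSentenceCaps` is a made-up helper, and the rule (a run of capitalised words that follows a lower-case letter, comma or semicolon plus a space) is just one crude way to skip sentence-initial words.

```javascript
// Wrap runs of capitalised words that occur mid-sentence in [[double brackets]],
// so that each one becomes a link (and a missing tiddler until it is created).
const linkMidSentenceCaps = text =>
  text.replace(/(?<=[a-z,;] )[A-Z][a-z]+(?: [A-Z][a-z]+)*/g, '[[$&]]');

console.log(linkMidSentenceCaps('This was when The Galactic Federation was born on Earth.'));
// → This was when [[The Galactic Federation]] was born on [[Earth]].
```

A proper noun that opens a sentence is missed by this rule, which is where a go-word list or existing tiddler titles would have to fill the gap.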

See a discussion I started some time ago that explored some advanced ideas we could implement in TiddlyWiki: Wordsmithing stop words, verbs past and present tense - #2 by TW_Tones

This subject has led me back to some prior discussions that are all related in a broader sense, including Measure of order in a tiddlywiki

Very thought provoking, @TW_Tones. I like the idea of having the ‘tags’ created as tiddlers and then being automatically identified by TW as links. Must play around with this.

bobj

FYI: I am building some word lists and need some regular expression help (see How to extract words with regular expressions). I am using this to analyse the tiddlywiki.com documentation, i.e. to split the documentation into words.

Just a final message regarding this issue.

I have completed my Xojo app which parses text documents for proper nouns and proper noun phrases. I have tried it on a few of my documents and it seems to work well, not 100% but well enough.

I have also added a facility to highlight words that I need to manually inspect, in my case the term ‘exhibition’ which may form part of an exhibition title but not be recognised as a proper noun phrase, for example, ‘21st exhibition of Paper works’. This also works well.
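The manual-inspection facility might look something like the sketch below. It is written in JavaScript purely for illustration and is not the Xojo implementation; `flagForReview` and the naive sentence split on `.`, `!` and `?` are assumptions.

```javascript
// Return the sentences that contain any of the watch terms (case-insensitive),
// so that a person can check them for titles the extractor did not recognise.
const flagForReview = (text, watchTerms) =>
  text.split(/(?<=[.!?])\s+/)
    .filter(sentence => watchTerms.some(
      term => sentence.toLowerCase().includes(term.toLowerCase())
    ));

const doc = 'He showed at the 21st exhibition of Paper works. Then he moved to Sydney.';
console.log(flagForReview(doc, ['exhibition']));
// → [ 'He showed at the 21st exhibition of Paper works.' ]
```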

I do not own a Xojo license for producing a compiled app, so I cannot share a copy of the app. I use the IDE to run a debug session to use the app from its source code (this is free).

Many thanks to all who contributed to the discussion.

bobj

If it is possible to share your word lists and details, we could look at implementing this in TiddlyWiki, perhaps starting from pseudocode and steps.

Up to you.

Happy to provide the Xojo project file. All you would need to do is install the free Xojo IDE and then you could run the project.

bobj

The App is now generating JSON files so that loading the content into tiddlers has now been simplified. So all content preparation is done outside of TW which makes things quicker for me.

Thinking about this, it might be a useful addition to TW: structure the tiddlers in TW, but use the App to generate the content at the tiddler level and then bulk load it into the TW file.
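For readers who want to try the same workflow without the App, the sketch below builds a JSON array of tiddler objects with string fields, which is the general shape TiddlyWiki accepts when a JSON file is imported. The App’s actual output is not shown in this thread, so the field choices, the `toTiddlers` helper and the “Proper Noun” tag are all assumptions.

```javascript
// Titles containing spaces need [[double brackets]] inside a tags field.
const tagFor = title => (/\s/.test(title) ? `[[${title}]]` : title);

// One tiddler for the document itself, plus one per approved proper noun.
// The "Proper Noun" tag is a hypothetical convention, not taken from the App.
const toTiddlers = (docTitle, text, properNouns) => [
  { title: docTitle, text, tags: properNouns.map(tagFor).join(' ') },
  ...properNouns.map(title => ({ title, text: '', tags: '[[Proper Noun]]' })),
];

const tiddlers = toTiddlers(
  'Tony McGillick Painter',
  'Trumper Park has been persistent in my life.',
  ['Trumper Park', 'Sydney']
);
console.log(JSON.stringify(tiddlers, null, 2));
```

Saving that output as a `.json` file and dragging it onto the wiki should bring up the import dialog for all three tiddlers at once.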

Thanks to all who have contributed to this development

bobj