Not a very valuable plugin because AI wrote it in a few minutes.

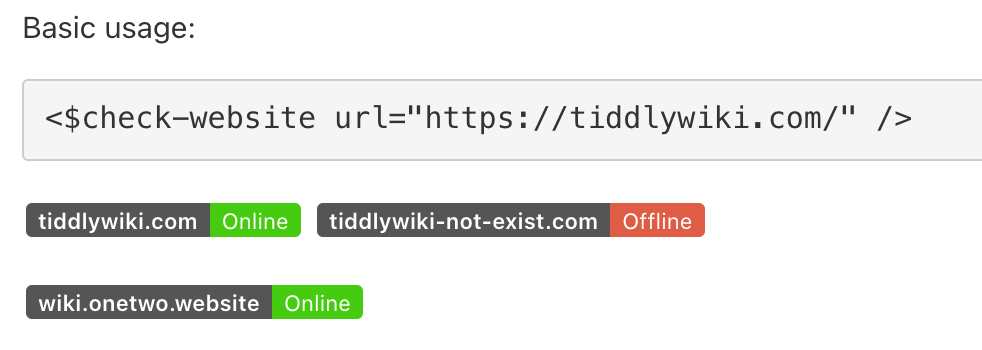

I use it to check the availability of my blog / digital garden website: https://wiki.onetwo.website/

Demo and drag to install: https://tiddly-gittly.github.io/tw-server-sitemap/#%24%3A%2Fplugins%2Flinonetwo%2Fcheck-website

CPL: https://tw-cpl.netlify.app/#Plugin_202310261631806%201

Source: src/check-website on the master branch of tiddly-gittly/tw-server-sitemap (GitHub)

The prompt used to write this “high quality” TypeScript TiddlyWiki plugin was:

Create a TiddlyWiki plugin to check the availability of a given URL. I want its usage to be similar to providing a widget.

The "interval" is optional, with a default value of 1h. It supports simple formats like 1h30m, but does not support "s" (seconds), as hours and minutes are generally sufficient.

Internally, it will periodically fetch the website to check basic accessibility.

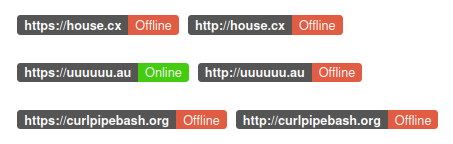

The result will be displayed as a badge, similar to the various badges commonly seen on GitHub project homepages. The content will be a "label". The "label" is also optional; if not provided, the host part of the URL will be used.

Provide usage examples in the readme. All content should be in English. You should refer to https://github.com/tiddly-gittly/Modern.TiddlyDev/tree/master/src/plugin-name to write the TypeScript version. Additionally, CSS can be used to implement the badge style.
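For anyone curious what the core logic described in the prompt might look like, here is a minimal TypeScript sketch. It is not the plugin's actual source: every name in it (`parseInterval`, `badgeLabel`, `checkOnce`, `startChecking`, `FetchLike`) is hypothetical. Falling back to 1h on an unparsable interval and treating any fetch error as "down" are my own assumptions.

```typescript
// Illustrative sketch only: all names here are hypothetical, not the plugin's real code.

const DEFAULT_INTERVAL_MS = 60 * 60 * 1000; // default of 1h, as stated in the prompt

/** Convert "1h", "30m" or "1h30m" to milliseconds; seconds are deliberately unsupported. */
function parseInterval(text?: string): number {
  if (!text) return DEFAULT_INTERVAL_MS;
  const match = /^(?:(\d+)h)?(?:(\d+)m)?$/.exec(text.trim());
  if (!match) return DEFAULT_INTERVAL_MS; // assumption: invalid input such as "45s" falls back to 1h
  const hours = match[1] ? Number(match[1]) : 0;
  const minutes = match[2] ? Number(match[2]) : 0;
  const ms = (hours * 60 + minutes) * 60 * 1000;
  return ms > 0 ? ms : DEFAULT_INTERVAL_MS; // blank or zero input also falls back to 1h
}

/** Use the given label, or the host part of the URL when no label is provided. */
function badgeLabel(url: string, label?: string): string {
  if (label && label.trim() !== "") return label;
  try {
    return new URL(url).host;
  } catch {
    return url; // assumption: show the raw text if it is not a valid URL
  }
}

type Status = "up" | "down";
// The fetch function is injected so the sketch can be tried without network access.
type FetchLike = (url: string) => Promise<{ ok: boolean }>;

/** One basic accessibility check: any 2xx response counts as "up". */
async function checkOnce(url: string, fetchFn: FetchLike): Promise<Status> {
  try {
    const response = await fetchFn(url);
    return response.ok ? "up" : "down";
  } catch {
    return "down"; // network error, DNS failure, blocked request, ...
  }
}

/** Check immediately, then once per interval; returns a function that stops the timer. */
function startChecking(
  url: string,
  intervalText: string | undefined,
  onResult: (status: Status) => void,
  fetchFn: FetchLike,
): () => void {
  const run = (): void => {
    void checkOnce(url, fetchFn).then(onResult);
  };
  run();
  const timer = setInterval(run, parseInterval(intervalText));
  return () => clearInterval(timer);
}
```

Something like `startChecking("https://wiki.onetwo.website/", "1h30m", (s) => console.log(s), fetch)` would drive it, with the result mapped to a badge color via CSS. Note that in a browser, cross-origin requests can be blocked by CORS, so a "down" result from a sketch like this is only a rough signal.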