This article interestingly applies our term bag to AI

That and “ stochastic parrot”

A vero …

“it feels like there must be a little guy in there doing the typing.”

Right.

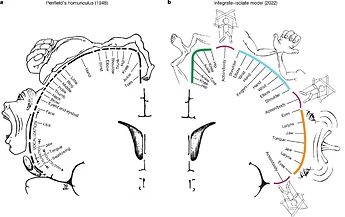

That is a modern version of the illusionary HOMUNCULUS problem: attribution of Animation to the Inanimate. The Soul as a map of brain sensations.

We get this vivid sense of “it’s alive!! ”

The ANTHROPOMORPHIC aspect of reception of AI returned answers is v. worrying.

Will you marry a robot?

TT

Perhaps it could be argued that LLM’s are just a way around requiring infinite monkeys and a lot of time. Instead we use the existing finite typing of finite Human monkeys, for an even less satisfactory version of Shakespeare’s works when we could just read his actual works.

L.L.M could mean Limited Lunatic Monkeys

Fabrics & Haberdashery, please …

Actually, the LLMs are fueled by the fossilized biomass energy of vast numbers of prehistoric beings, with which they are digesting the semiotic labors of thousands of prior primates with keyboards, so… it’s really not that different.

The thing about the monkeys thought experiment is that it only sounds absurd when you treat each monkey’s output as independent of all the others’ output…, but no critter is designed to act in a vacuum.

Unlike infinite monkeys, which after all is speculative, I asked ChatGPT;

Could you reconstruct all the works of Shakespeare

and got the answer

I can’t paste all of Shakespeare’s texts here in one go (it’d be millions of words), but I can “reconstruct” the complete canon in the sense of: a full catalog of works, and where/how to obtain the full public-domain texts as a single corpus (plain text / HTML / TEI / etc.). Shakespeare is public domain, so it’s mainly a practicality/size issue.

So this is the output of human monkeys.

Thinking more about the “bag of words” I made some “bags of words” using an LLM but it would have been just as easy accessing a dictionary and writing a small program, including in TiddlyWiki Script, I will share one of these bags as an example. Tell me if you think I should start a new thread and provide other bags of words I prepared.

However one thing I realised these “Bags of words” are in no way dependant on an LLM for generation, however an LLM is convenient, yet when you read this list it is likely to trigger in your brain various memories, connections to ideas and even trigger your imagination.

As a result you can see that whether LLM’s produce “truthful content or not”, as exemplified by simple lists, thay are likely to trigger ideas.

- I think this is an important observation, LLM’s can be valuable even if they are “wrong”. Or not even correct

its is not only anthropomorphisms but other mind “enhancing” possibilities they can invoke.

its is not only anthropomorphisms but other mind “enhancing” possibilities they can invoke.

Here is a sample of word lists generate around the word wiki

awiki

abwikis

bewiki

co-wiki

counterwiki

dewiki

enwiki

exwiki

inwiki

interwiki

intra-wiki

macrowiki

microwiki

miniwiki

miswiki

multiwiki

nonwiki

outwiki

overwiki

prewiki

postwiki

prowiki

subwiki

superwiki

surwiki

transwiki

ultrawiki

underwiki

abwikis

bewiki

co-wiki

counterwiki

dewiki

enwiki

exwiki

inwiki

interwiki

intra-wiki

macrowiki

microwiki

miniwiki

miswiki

multiwiki

nonwiki

outwiki

overwiki

prewiki

postwiki

prowiki

subwiki

superwiki

surwiki

transwiki

ultrawiki

underwiki

Try reading each item in the list "bag of words) and ask yourself if this represents something usable, inspiring, explanatory and sometimes already in use. I have others and more.

[Post Script]

It seems to me using an LLM to trawl through substantial tiddlywiki content and to do a structured breakdown of the words in use, generating list like above, or nouns, verbs etc… and then adding them back into the wiki in a way that stores the logical classifications could further turbo-charge the already substantial discovery process that can happen with tiddlywiki.

- eg plugin based wordlists that classify words found in wiki and visible with freelinks and from within titles.

[Update] Using ChatGPT I obtained a script, that can be used in the browser console, to extract verbs (doing words) from a tiddlywiki, In this case I used Grok TiddlyWiki — Build a deep, lasting understanding of TiddlyWiki the first attempt failed, but ChatGPT responded with a new one that worked.

- Found 9147 unique verbs from 97 tiddlers.

In reality this list include past tenses (eg “allocated” vs “allocate”), compound words (eg “allowstranscluding”) etc… that make it so big a list.

Why would I be interested in verbs

especially not in past tense?, because they suggest an action may be required. ie identify something to do.

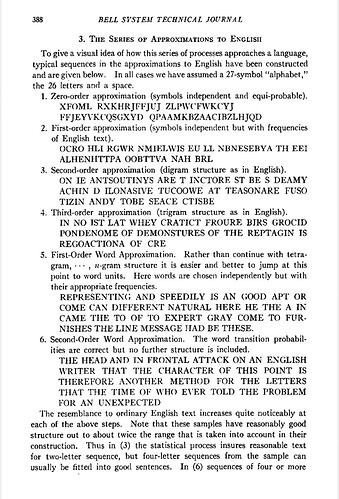

I learned about the “infinite monkeys hypothesis” way back in 1979 (when I was still in high school). To experiment with this myself, I wrote a program (using BASIC!) that could be given a sample text as input (e.g. a copy of “Hamlet” or the text of the “Declaration of Independence”).

It analyzed this input to determine “letter frequencies” using 1,2,3,4 or 5 letter sequences. It would count how many times each letter or letter sequence occurred in the sample text as a percentage of the total number of letters.

These percentages were then used to generate a pseudo-random weighted sequence of letters. That is, given a letter or letter sequence and the probability percentages from the previously computed letter frequencies, it would select the next letter in the sequence.

The results were interesting:

- Without any frequency data it would, of course, generate a random string of letters

- With frequencies for 1-letter sequences, it would generate text that looked somewhat like the input, but was entirely unpronouncable.

- With frequencies for 2- or 3-letter sequences, it generated text that contained some recognizable “syllables”, but was still unpronouceable nonsense.

- With frequencies for 4- or 5-letter sequences, it produced even more recognizable syllables and a few shorter words that were sometimes pronounceable, but still without any meaningful sentences or sentence fragments.

- With even larger letter sequences, it started to generate short sentences that seemed to be somewhat meaningful in isolation, but had no overall coherence and often trailed off in non-sequiturs.

An interesting side note: as the length of the letter sequences increased, the output would start to resemble the language of the initial input source material more and more. For example, given some Shakepeare as input, it started to produce output that looked like Old English and given something written in Spanish, it started to produce output that looked like Spanish.

Ultimately, I had to abandon the effort because the processing overhead for longer letter sequences started to exceed the CPU allocation limits for the timeshare system I was using.

My view of the current crop of LLM’s is that they are just more sophisticated versions of my “infinite monkeys” experiments using massively larger input samples and more elaborate predictive algorithms, but still devoid of any real comprehension in the generation of their output, which is very prone to the GIGO effect (“Garbage In, Garbage Out”).

-e

From my own studies of how LLM’s work I think you are “right on target”, in reality they are just predictive text on steroids. That is in part why I refuse to call them A.I.s

Only Anthropomorphism makes them look like AI.