This thread got me experimenting with local LLMs and TiddlyWiki. I feel there’s lots of exciting potential here that can be realized with further development around integrating TW content and LLMs.

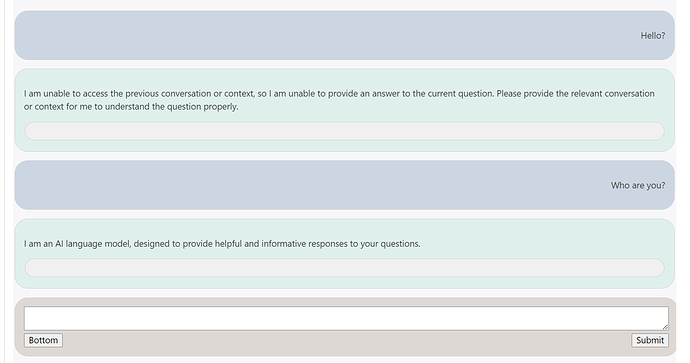

First I checked out and successfully ran the AI tools plugin @jeremyruston linked. I was able to get an AI Conversation going with Llamafile from within my TiddlyWiki quite easily.

However, some further exploration led me to LM Studio (Getting Started | LM Studio Docs), and IMO it's a much more convenient option for discovering LLMs, running them locally, and integrating with the AI tools plugin, for a few reasons:

- It has a convenient UI that includes a catalog of available LLMs that you can browse and download with a click.

- Once downloaded, you can easily run them in LM Studio using a familiar chat interface.

- GPU offload is supported.

- You can start an HTTP server with OpenAI-like endpoints, so from an API point of view it's a local drop-in alternative to OpenAI: Local LLM Server - Running LLMs Locally | LM Studio Docs. I was able to simply clone the AI tools plugin's OpenAI server adapter ($:/plugins/tiddlywiki/ai-tools/servers/openai), change the caption, and change the URL to http://localhost:1234/v1/chat/completions.
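For anyone who wants to poke at the LM Studio server outside TiddlyWiki, here's a minimal Python sketch of an OpenAI-style chat completions request against the URL above (port 1234). The model identifier `local-model` is a hypothetical placeholder for whatever model you've loaded, and the reply parsing assumes the usual OpenAI-style `choices[0].message.content` response shape.

```python
import json
import urllib.request

# URL from the post; LM Studio's local server listens on port 1234 by default.
LMSTUDIO_URL = "http://localhost:1234/v1/chat/completions"


def build_chat_request(prompt: str, model: str = "local-model",
                       url: str = LMSTUDIO_URL) -> urllib.request.Request:
    """Build an OpenAI-style chat completions POST request.

    "local-model" is a hypothetical placeholder; substitute the identifier
    of the model you have loaded in LM Studio.
    """
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(response_json: dict) -> str:
    """Pull the assistant text out of an OpenAI-style response (assumed shape)."""
    return response_json["choices"][0]["message"]["content"]


def ask(prompt: str) -> str:
    """Send the request to the running local server and return the reply text."""
    with urllib.request.urlopen(build_chat_request(prompt)) as resp:
        return extract_reply(json.load(resp))
```

With the LM Studio server started and a model loaded, `print(ask("Hello"))` should print the model's reply.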

@JanJo you may find LM Studio + TiddlyWiki a useful combination.

Now, Anything LLM and its RAG (Retrieval Augmented Generation) capabilities feel like the ideal bridge between LLMs and a TiddlyWiki workflow. RAG lets you upload your own docs, PDFs, and text files, so you can query the LLM both on its original training knowledge and on the personal files you've uploaded. This is clearly the kind of workflow @Zheng_Bangyou was experimenting with, and it feels like there's huge potential there.
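To make the RAG idea a bit more concrete: find the passages most relevant to the question, then put them into the prompt alongside it. The Python sketch below is a deliberately simplified illustration and not how Anything LLM works internally. Real RAG systems rank chunks by vector-embedding similarity, whereas this swaps in plain word-overlap scoring so it runs with the standard library only. All function names are hypothetical.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens (letters and digits only)."""
    return re.findall(r"[a-z0-9]+", text.lower())


def overlap_score(query: str, doc: str) -> int:
    """Count shared word occurrences (a toy stand-in for embedding similarity)."""
    q = Counter(tokenize(query))
    d = Counter(tokenize(doc))
    return sum(min(q[w], d[w]) for w in q)


def retrieve(query: str, docs: dict[str, str], k: int = 2) -> list[str]:
    """Return the titles of the k docs that score highest against the query."""
    ranked = sorted(docs, key=lambda title: overlap_score(query, docs[title]),
                    reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: dict[str, str], k: int = 2) -> str:
    """Augment the question with the retrieved passages as context."""
    context = "\n\n".join(f"[{t}]\n{docs[t]}" for t in retrieve(query, docs, k))
    return f"Answer using the context below.\n\n{context}\n\nQuestion: {query}"
```

The augmented prompt is what would then be sent to the LLM backend; the model only "knows" your files because the relevant snippets ride along in the prompt.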

I am running my TiddlyWiki on the NodeJS server so individual tiddlers are stored on the filesystem as *.tid files. I experimented a bit with uploading all of my Journal tiddler *.tid files into an Anything LLM workspace and asking it questions like “What was the first day I started working on project xyz, and how many days have I been working on it?” I also uploaded a datasheet PDF for an IC chip and asked for some details from the datasheet.

Note: I had to make a minor modification to Anything LLM code so that it would accept *.tid files and treat them as plain text.
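As a rough illustration of what treating a `.tid` file as plain text involves, here's a Python sketch that splits one into its header fields and body, assuming the usual layout of `name: value` header lines, a blank line, then the tiddler text. A possible alternative to patching Anything LLM (untested here) would be to write this output to `.txt` copies and upload those instead; `convert_folder` is a hypothetical helper for that.

```python
from pathlib import Path


def parse_tid(raw: str) -> tuple[dict[str, str], str]:
    """Split .tid content into (fields, body).

    Assumes the usual layout: 'name: value' header lines, a blank line,
    then the tiddler text. Header lines without a colon are skipped.
    """
    raw = raw.replace("\r\n", "\n")  # tolerate Windows line endings
    header, _, body = raw.partition("\n\n")
    fields: dict[str, str] = {}
    for line in header.splitlines():
        # Split at the first colon only, so titles like "Meeting: notes" survive.
        name, colon, value = line.partition(":")
        if colon:
            fields[name.strip()] = value.strip()
    return fields, body


def tid_to_plain_text(raw: str) -> str:
    """Render a tiddler as plain text: the title line, a blank line, then the body."""
    fields, body = parse_tid(raw)
    title = fields.get("title", "(untitled)")
    return f"{title}\n\n{body.strip()}\n"


def convert_folder(src: Path, dest: Path) -> int:
    """Write a .txt copy of every top-level .tid file in src into dest; return the count."""
    dest.mkdir(parents=True, exist_ok=True)
    count = 0
    for tid in sorted(src.glob("*.tid")):
        text = tid_to_plain_text(tid.read_text(encoding="utf-8"))
        (dest / (tid.stem + ".txt")).write_text(text, encoding="utf-8")
        count += 1
    return count
```

Keeping the title as the first line means each uploaded document still says which tiddler (e.g. which journal day) it came from.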

Some of this initial exploration of workflow options was outside of TiddlyWiki, but Anything LLM can use LM Studio (among many other choices, including OpenAI) as its LLM backend, and the TW AI tools plugin can also use LM Studio as its backend, so there seem to be quite a few ways to combine TiddlyWiki, Anything LLM, and LM Studio (or other LLM backends) in a workflow.

Anything LLM has the concept of workspaces where you can upload your own documents, and then those documents become part of the context in your AI conversations / LLM chats.

Enhancing the AI tools plugin so it can add TiddlyWiki content directly to an Anything LLM workspace (e.g. uploading all tiddlers with a certain tag) could open up some really interesting possibilities. I haven't looked closely at @Zheng_Bangyou's plugin yet; it may already be doing exactly this.
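As a starting point for the "all tiddlers with a certain tag" idea, here's a Python sketch that picks out `.tid` files on disk by tag. It assumes the Node.js one-file-per-tiddler layout and TiddlyWiki's tags-field syntax (space-separated, with `[[...]]` around tags that contain spaces). The helper names are hypothetical, and it doesn't talk to Anything LLM at all: the matching files would still need to be uploaded to a workspace.

```python
import re
from pathlib import Path

# Tags are a space-separated list; tags containing spaces are wrapped
# in [[double square brackets]].
_TAG_TOKEN = re.compile(r"\[\[(.*?)\]\]|(\S+)")


def parse_tag_list(value: str) -> list[str]:
    """Split a TiddlyWiki tags field into individual tag names."""
    return [bracketed or bare for bracketed, bare in _TAG_TOKEN.findall(value)]


def read_tags(raw: str) -> list[str]:
    """Return the tags of a .tid file (header fields end at the first blank line)."""
    header = raw.replace("\r\n", "\n").partition("\n\n")[0]
    for line in header.splitlines():
        name, colon, value = line.partition(":")
        if colon and name.strip() == "tags":
            return parse_tag_list(value.strip())
    return []


def tiddlers_with_tag(folder: Path, tag: str) -> list[Path]:
    """List .tid files under folder (including subfolders) tagged with `tag`."""
    return [
        p for p in sorted(folder.rglob("*.tid"))
        if tag in read_tags(p.read_text(encoding="utf-8"))
    ]
```

For example, `tiddlers_with_tag(Path("mywiki/tiddlers"), "Journal")` would list the journal tiddlers; the path is illustrative only.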

For anyone curious, here's a pretty easy-to-follow introduction to setting up Anything LLM and LM Studio: How to implement a RAG system using AnythingLLM and LM Studio | Digital Connect

FWIW I’m doing this on Linux, and I’ve been playing with the Mistral Nemo 2407 (12B parameter model).

and events are unfolding rapidly.

Because I loved it and can't find any competitor that matches it in terms of a comfortable UI