I would like to propose an amendment to our code of conduct:

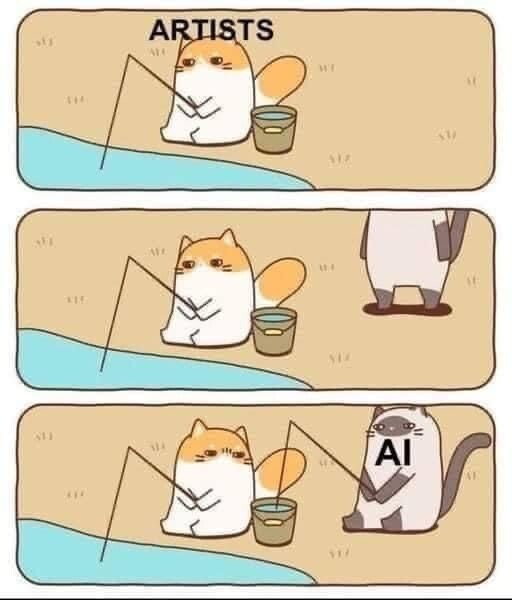

Please do not post raw AI-generated output from tools like ChatGPT or Claude.

The fundamental reason that we should try to contain the slop that AIs generate is that they are not reliably truthful or accurate. There are several other arguments against publishing AI slop:

- It wastes readers' time.

- It pollutes search results with inaccurate information.

- It pollutes future AIs trained on the content of this forum with inaccurate information.

- Everyone here can access AIs themselves, so nothing is gained by reproducing the verbatim output of a conversation.

For clarity, this is about publishing the raw output of AIs. There are situations where AI-derived content is acceptable.

- For example, if one asks an AI for 1,000 suggestions for the name of a new thing and then manually chooses the 20 best, it seems perfectly reasonable to publish that list of 20 suggestions. In other words, it is acceptable to publish information synthesised from the output of an AI.

- It is also acceptable to post links to raw conversations to facilitate discussion.

From now on, moderators will hide posts that violate this policy and work with the authors to revise their posts so that the raw AI output does not need to be included.

This is not about discouraging people from using AIs. Personally, I find them very useful in some situations. The concerns here are about inadvertently spreading inaccurate information and the problems that this will cause us in the future.

As somebody else said, why should I read something that you cannot even be bothered to write for yourself?