Just an update on the review plugin tool now that I have been using it for six weeks or more.

I now have close to 600 tiddlers in my review pool, some paragraphs and some entire articles. That’s 600 out of a total of over 2000 tiddlers. It’s a big wiki; last time I worked it out, I estimated it was equivalent to five Bibles in terms of text.

In some ways I am a bit exhausted: it never sleeps, and every day it throws ten or more tiddlers at me that it thinks I need to review. Actually that is incorrect - I set the review interval in days, so it is past-me who judged two or three weeks ago that this would be a good time for present-me to re-read xyz. I guess all I am saying is that, like many creations, it seems to take on a life of its own. All I do is hit the increment or decrement review interval button on tiddlers, but as a result a whole future morning’s research changes, and relationships are briefly exposed at the surface as each unique list of review tiddlers is considered one after the other, giving cross-tiddler ideas an opportunity to emerge.

Of course it would be a mistake to characterise my additional activity as being confined to an expanding pool of 600 review tiddlers. They all contain links and references to other tiddlers, and often, rather than reviewing the 20 or 30 tiddlers I should review today, I end up looking at only one of them… and then go off on a trail, sorting out some connection or trying to find a tiddler that really should be referenced from the current one. Quite often a great deal of work emerges but only one tiddler gets ticked off the “overdue a review” list.

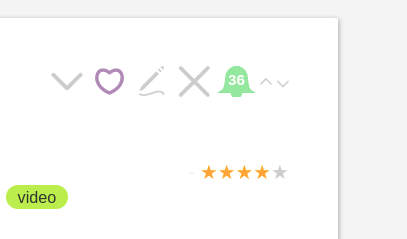

Unlike a paper calendar “todo” list, if a tiddler due for review today does not get reviewed then it’s still on the list tomorrow, so it keeps me on my toes. There is currently no way of saying “look I am on vacation please stop sending logs down the river”. The only way to avoid a large “log jam” is to say to myself “I haven’t got time right now” and simply hit the ‘reviewed’ (red bell) button even though I did not invest much time in the review. Sometimes just reading the tiddler title is better than nothing; it’s often enough to cause a certain level of recollection.

In summary my Tiddlywiki quality is going up a lot faster than before for the following reasons…

- Each tiddler gets reviewed: I correct mistakes and add tags that perhaps did not exist when the tiddler was created.

- I spot more opportunities for links between tiddlers that are ‘crying out’ to be made.

- Despite the fact that my head gets a bit dizzy, I am making deeper progress in my research. It’s like fossil hunting for connections: my excavations are getting deeper and my ‘finds’ are getting better catalogued and cross referenced.

The workload is defined by the number of tiddlers in the review pool and the interval, expressed in days, at which I judge each tiddler needs to be revisited. For a new tiddler I might set the interval at only 3 days and then extend it over time. In general, with all reviews, I am asking myself whether the information was current in my head, OR easily recalled once prompted, OR forgotten.
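As a rough back-of-envelope illustration (not the plugin's actual code), a tiddler reviewed every N days contributes on average 1/N reviews per day, so the daily workload is roughly the sum of 1/N over the pool. The pool breakdown below is entirely made up for the example; only the 600 total, the 3 day starting interval and the 99 day maximum come from this post.

```python
from fractions import Fraction


def expected_reviews_per_day(intervals_in_days):
    """Average number of tiddlers falling due per day.

    A tiddler with a review interval of N days comes up once every N days,
    so it contributes 1/N to the daily average.
    """
    return float(sum(Fraction(1, days) for days in intervals_in_days))


# Hypothetical pool of 600 tiddlers (the split between intervals is invented).
pool = [3] * 12 + [14] * 140 + [21] * 210 + [99] * 238

print(len(pool))
print(round(expected_reviews_per_day(pool), 1))
```

The point of the sketch is that short intervals dominate: in this invented pool the 12 tiddlers on a 3 day interval generate more daily work than the 238 tiddlers on 99 days.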

For quite a while now I have used a system of highlighting ( looking just like neon highlight pens ), a faint purple for really important and a faint yellow for very important - this is proving very useful when reviewing article length tiddlers. If perhaps four or five of these landed on my “review today” pile, I initially tended to read the whole article, but now I have realised there is an art in highlighting, which arises naturally out of a decision to read the whole article once in a while but mostly just to scan the highlighted text to see if I remember the core points or not. When I read the whole article I am asking myself whether the highlighted text does the information being presented justice, or whether I really need to highlight some other key points, because I know that 90% of the time I will probably only have time to read the highlights when reviewing. Of course this is nothing new, it’s what highlighting is all about, but this particular way of working has really crystallised this for me.

One thing that constantly pops up is the observation that when I mentally say “yeah yeah - all familiar, I still get all of this bit” I then spot an important caveat or qualifier that I had actually forgotten about, or at least that qualifier was buried deeper in the swamp I call a mind than the other bits. This is the classic case of thinking you know something but then realising that what you actually know is your own version of it - and your version is incomplete or includes a distortion.

This is so much more than a simple old school review card system.

- The user chooses the review interval and will often change the interval for a particular tiddler over time, there is a feedback loop here governed by the user’s own assessment of their recall, the actual value of the information and so on.

- The user can adjust the content of the card each review, spot relationships they did not spot before and add new links.

- It’s not all about recall - sometimes I give a review period of a day simply as a way of flagging something that is in progress and needs finishing off, once finished I will then set a review interval based on different factors.

Ranking And Quality

My whole interest in this area started with things like star ratings, numeric ratings ( I have tags called 1, 2 and 3 ) and so on. I was constantly searching for a way to somehow rank tiddlers but at the same time confused as to what I was really after. I was reacting to the ability of Tiddlywiki to let me collect and collate more information and ideas than my brain was able to hold, or at least recall, at any given moment. There is of course a hope that, as research shows more connections and leads to greater understanding, a more manageable subset will make itself known.

I realised that this review frequency concept was simply a subconscious answer to the question “well if you had a perfect ranking system what would you actually use it for?” One answer was simply how often I should re-read certain tiddlers, so by writing this plugin I evaded the ranking question and moved on to the next step directly.

So how I evaluate the review plugin as a ranking system is a back to front question as long as I make the assumption that ranking only has application for determining which chunks of information get the most attention.

There is a good probability that a really important tiddler will have a short review interval.

There is a good probability that a low importance tiddler will have a long review interval ( max 99 days ).

But there is a good probability that a really important tiddler that I hardly ever forget will also have a long review interval.

So it’s not strictly true that the review interval idea is a true ranking system but it still has value in that direction.

So really I have more questions than answers here but at the same time I have progressed.

Ranking Inflation

I noticed that one of the problems I faced with the star and numeric ranking systems I was using was that after a while I tended to give the new or latest idea a high ranking, the feeling was that I hardly ever looked at lower ranking tiddlers anymore and so really I was just consigning an idea to the bottom of the pond if I gave it a low ranking. The instinct was if in doubt don’t discard an idea so give it a higher ranking than it really deserves. Very often the thing we are looking at ‘now’ seems more important just because it is current.

Somehow the review interval concept is working much better in this area. I often give a newly created tiddler that has a medium to low importance a review period of say 3 days when first created but then as it keeps on cropping up in the review list to be looked at today I think “too often! - not enough value here!” and so I increase the review interval and see how that works out. I know I am not consigning the information to the bottom of the pond - as long as the tiddler stays in the review pool it will get looked at now and then - the max interval is 99 days so nothing stays on the bottom left to rot - everything gets a second chance eventually.
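The increment and decrement behaviour described above amounts to nudging a number of days up or down within fixed bounds. A minimal sketch follows, assuming a lower bound of 1 day (suggested by the “review period of a day” mentioned earlier, but not confirmed as a hard limit) and the 99 day maximum. The function name and step size are hypothetical, not taken from the plugin.

```python
MIN_INTERVAL_DAYS = 1   # assumption: one day is the shortest interval
MAX_INTERVAL_DAYS = 99  # maximum interval stated in the post


def adjust_interval(current_days, step):
    """Return the new review interval after adding step (which may be negative).

    The result is clamped so that a tiddler can never drop out of the pool by
    having its interval pushed beyond the maximum.
    """
    return max(MIN_INTERVAL_DAYS, min(MAX_INTERVAL_DAYS, current_days + step))


# A medium importance tiddler that starts at 3 days and keeps cropping up "too often".
interval = 3
for _ in range(4):
    interval = adjust_interval(interval, +7)
print(interval)
```

Because of the clamp, repeatedly hitting “increment” only ever parks a tiddler at 99 days, which is the “second chance eventually” property.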

So yes as far as ranking inflation goes I would say “problem solved”.

Future:

There is one minor “buglet”.

A tiddler will display both a Green Bell and a Red Bell on its review anniversary - the day it needs reviewing. It should only display the red one. The system is perfectly workable but I would like to get it right, and I will need to call on gurus to help me with the syntax. The issue boils down to the use of the exclamation mark ‘!’ in filter syntax with the ‘days’ operator, which is not co-operative in the sense that it complicates the creation of what we might call pseudo “IF-ELSE” filter statements.
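To pin down the intended behaviour independently of filter syntax, here is the “IF-ELSE” written out in Python. This is only a statement of the desired outcome, not a fix for the filter. It assumes the Green Bell means “in the review pool but not yet due”, and the function and parameter names are made up for the example.

```python
from datetime import date, timedelta


def bell_for(last_reviewed, interval_days, today):
    """Return exactly one bell colour for a tiddler in the review pool.

    Red covers the anniversary day itself and every overdue day after it.
    Green covers only the days strictly before the anniversary.
    """
    due_on = last_reviewed + timedelta(days=interval_days)
    if today >= due_on:
        return "red"
    return "green"


last = date(2024, 1, 1)
print(bell_for(last, 14, date(2024, 1, 14)))  # day before the anniversary
print(bell_for(last, 14, date(2024, 1, 15)))  # the anniversary itself
print(bell_for(last, 14, date(2024, 1, 20)))  # overdue
```

The buglet corresponds to the boundary day: the two conditions need to be exact complements, so that the anniversary falls on one side only.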

Once the buglet is solved I will put out another “json” export as done previously above, I don’t expect many people will have a use for the tool but I think those who do may find it interesting to work with.

Future Versions:

The original versions did not alter the “date last modified” field of a tiddler for any of the review operations - this was intentional.

Whilst I was on vacation, with my single file Tiddlywiki on my phone ( I usually work on my laptop ) I realised I wanted to know when I got back which tiddlers I had reviewed and to preserve any review interval changes.

This made me realise that these decisions need to be options - should the following actions cause the tiddler date last modified to be changed?

- Adding a tiddler to the review pool

- Changing the review interval

- The act of confirming a tiddler has just been reviewed

In the end I did not perform any manual syncing. I decided to simply adopt the newer version on my phone and transfer it to my laptop, but I think this flexibility should still be an option.
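The three yes/no decisions listed above could be modelled as independent switches, all off by default to match the original behaviour. The sketch below is purely illustrative: the option names, the action names and the dictionary representation of a tiddler are assumptions, not the plugin's real settings.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class TouchOptions:
    """Hypothetical switches: should each review action update 'modified'?"""
    on_add_to_pool: bool = False
    on_interval_change: bool = False
    on_reviewed: bool = False


def maybe_touch(tiddler, action, options, now):
    """Return a copy of the tiddler, updating 'modified' only if the option allows it."""
    should_touch = {
        "add_to_pool": options.on_add_to_pool,
        "interval_change": options.on_interval_change,
        "reviewed": options.on_reviewed,
    }[action]
    if should_touch:
        return {**tiddler, "modified": now}
    return dict(tiddler)


# Vacation scenario: record which tiddlers were reviewed, leave the rest alone.
options = TouchOptions(on_reviewed=True)
tiddler = {"title": "xyz", "modified": datetime(2020, 1, 1)}
after = maybe_touch(tiddler, "reviewed", options, datetime(2020, 2, 1))
print(after["modified"])
```

With only the “reviewed” switch on, sorting by date last modified after a trip would list exactly the tiddlers that were reviewed while away.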

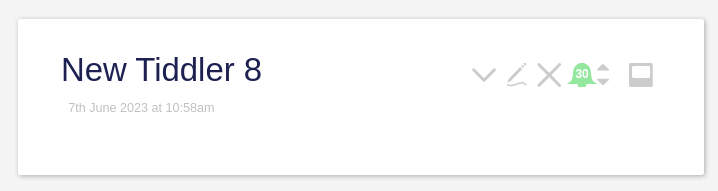

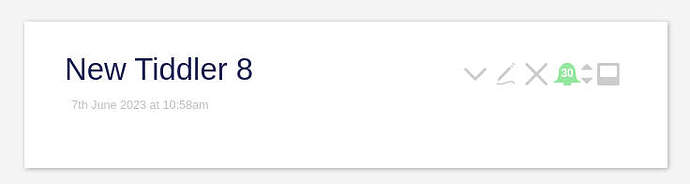

it does indeed allow the up and down icons to be aligned without leaving an idiosyncratic space before any other icon that might follow.
